
imate

C++/CUDA Reference


An interface to the CUDA library that facilitates common CUDA tasks, such as allocating device memory and copying data to and from the device. All member functions of this class are public and static, so the class serves as a namespace.

#include <cuda_interface.h>

Static Public Member Functions

| Return type | Member function | Description |
| --- | --- | --- |
| static ArrayType * | alloc (const LongIndexType array_size) | Allocates memory on gpu device. This function creates a pointer and returns it. |
| static void | alloc (ArrayType *&device_array, const LongIndexType array_size) | Allocates memory on gpu device. This function uses an existing given pointer. |
| static void | alloc_bytes (void *&device_array, const size_t num_bytes) | Allocates memory on gpu device. This function uses an existing given pointer. |
| static void | copy_to_device (const ArrayType *host_array, const LongIndexType array_size, ArrayType *device_array) | Copies memory on host to device memory. |
| static void | del (void *device_array) | Deletes memory on gpu device if its pointer is not NULL, then sets the pointer to NULL. |
| static void | set_device (int device_id) | Sets the current device in multi-gpu applications. |
| static int | get_device () | Gets the current device in multi-gpu applications. |

An interface to the CUDA library that facilitates common CUDA tasks, such as allocating device memory and copying data to and from the device. All member functions of this class are public and static, so the class serves as a namespace.

Definition at line 36 of file cuda_interface.h.

static void alloc (ArrayType *&device_array, const LongIndexType array_size)

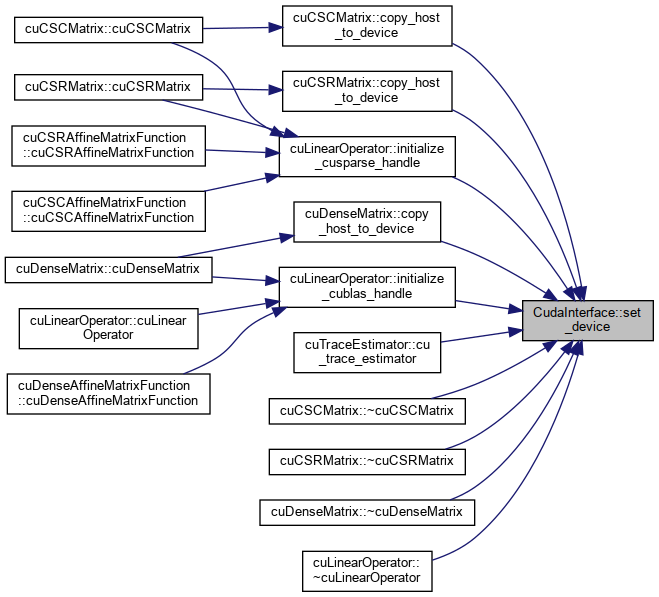

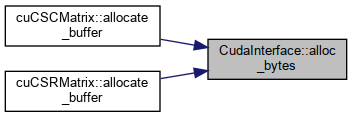

Allocates memory on gpu device. This function uses an existing given pointer.

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in,out] | device_array | A pointer to the device memory to be allocated. |
| [in] | array_size | Size of the array to be allocated. |

Definition at line 76 of file cuda_interface.cu.

References cudaMalloc().

static ArrayType * alloc (const LongIndexType array_size)

Allocates memory on gpu device. This function creates a pointer and returns it.

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | array_size | Size of the array to be allocated. |

Definition at line 36 of file cuda_interface.cu.

References cudaMalloc().

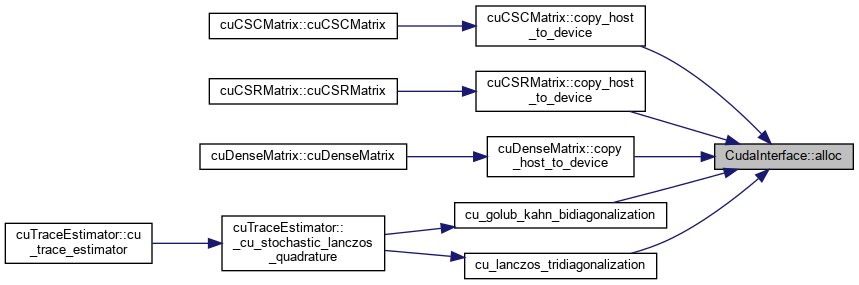

Referenced by cuCSCMatrix< DataType >::copy_host_to_device(), cuCSRMatrix< DataType >::copy_host_to_device(), cuDenseMatrix< DataType >::copy_host_to_device(), cu_golub_kahn_bidiagonalization(), and cu_lanczos_tridiagonalization().
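The two alloc overloads differ only in how the pointer reaches the caller: one returns it, the other fills a pointer passed by reference. The host-only sketch below mirrors both shapes so that it compiles without a GPU. It is not the library's code: std::malloc stands in for cudaMalloc, and the names alloc_returning and alloc_into, as well as the LongIndexType typedef, are assumptions made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Assumption: stand-in for the library's index type; the real typedef may differ.
typedef long LongIndexType;

// Shape 1 (hypothetical name): create a pointer and return it.
// Host stand-in: std::malloc replaces cudaMalloc so this runs without a GPU.
template <typename ArrayType>
ArrayType* alloc_returning(const LongIndexType array_size)
{
    const std::size_t num_bytes =
        static_cast<std::size_t>(array_size) * sizeof(ArrayType);
    void* raw = std::malloc(num_bytes);
    assert(raw != NULL);
    return static_cast<ArrayType*>(raw);
}

// Shape 2 (hypothetical name): fill a pointer that the caller already owns.
// The pointer is taken by reference so the new address is visible to the caller.
template <typename ArrayType>
void alloc_into(ArrayType*& array, const LongIndexType array_size)
{
    array = alloc_returning<ArrayType>(array_size);
}
```

The returning form suits a declaration with an initializer, while the reference form suits a member pointer that already exists, for example inside a matrix class.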

static void alloc_bytes (void *&device_array, const size_t num_bytes)

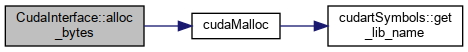

Allocates memory on gpu device. This function uses an existing given pointer.

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in,out] | device_array | A pointer to the device memory to be allocated. |
| [in] | num_bytes | Number of bytes of the array to be allocated. |

Definition at line 115 of file cuda_interface.cu.

References cudaMalloc().

Referenced by cuCSCMatrix< DataType >::allocate_buffer(), and cuCSRMatrix< DataType >::allocate_buffer().
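Since alloc_bytes takes a byte count rather than an element count, a caller that starts from an element count has to convert it first. The helper below is a minimal sketch of that conversion. The name num_bytes_for and the LongIndexType typedef are assumptions made for illustration and are not part of the documented interface.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <stdexcept>

// Assumption: stand-in for the library's index type; the real typedef may differ.
typedef long LongIndexType;

// Hypothetical helper: convert an element count into a byte count.
// Negative counts and products that would overflow size_t are rejected.
template <typename ArrayType>
std::size_t num_bytes_for(const LongIndexType array_size)
{
    if (array_size < 0)
    {
        throw std::invalid_argument("array_size must be non-negative");
    }

    const std::size_t count = static_cast<std::size_t>(array_size);
    const std::size_t limit =
        std::numeric_limits<std::size_t>::max() / sizeof(ArrayType);

    if (count > limit)
    {
        throw std::overflow_error("byte count does not fit in size_t");
    }

    return count * sizeof(ArrayType);
}
```

The result of such a helper would be the value passed as num_bytes.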

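static void copy_to_device (const ArrayType *host_array, const LongIndexType array_size, ArrayType *device_array)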

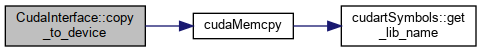

Copies memory on host to device memory.

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | host_array | Pointer to the 1D array in host memory. |
| [in] | array_size | The size of the array on host. |
| [out] | device_array | Pointer to the destination memory on device. |

Definition at line 142 of file cuda_interface.cu.

References cudaMemcpy().

Referenced by cuCSCMatrix< DataType >::copy_host_to_device(), cuCSRMatrix< DataType >::copy_host_to_device(), cuDenseMatrix< DataType >::copy_host_to_device(), cu_golub_kahn_bidiagonalization(), cu_lanczos_tridiagonalization(), and cuOrthogonalization< DataType >::orthogonalize_vectors().
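The argument order of copy_to_device (source, size, destination) can be illustrated with a host-only stand-in. The sketch below is not the library's implementation: std::memcpy replaces the cudaMemcpy call so the code runs without a GPU, the name copy_to_buffer and the LongIndexType typedef are assumptions, and treating array_size as an element count is also an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <vector>

// Assumption: stand-in for the library's index type; the real typedef may differ.
typedef long LongIndexType;

// Hypothetical host stand-in for a host-to-device copy.
// On a real device, the std::memcpy line would instead be a call such as
// cudaMemcpy(device_array, host_array, num_bytes, cudaMemcpyHostToDevice).
template <typename ArrayType>
void copy_to_buffer(
        const ArrayType* host_array,
        const LongIndexType array_size,
        ArrayType* device_array)
{
    // Assumption: array_size counts elements, so it is scaled to bytes here.
    const std::size_t num_bytes =
        static_cast<std::size_t>(array_size) * sizeof(ArrayType);
    std::memcpy(device_array, host_array, num_bytes);
}
```

The destination must already be allocated with room for at least array_size elements before the copy is made.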

static void del (void *device_array)

Deletes memory on gpu device if its pointer is not NULL, then sets the pointer to NULL.

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in,out] | device_array | A pointer to memory on device to be deleted. This pointer will be set to NULL. |

Definition at line 166 of file cuda_interface.cu.

References cudaFree().

Referenced by cuCSCMatrix< DataType >::allocate_buffer(), cuCSRMatrix< DataType >::allocate_buffer(), cu_golub_kahn_bidiagonalization(), cu_lanczos_tridiagonalization(), cuCSCMatrix< DataType >::~cuCSCMatrix(), cuCSRMatrix< DataType >::~cuCSRMatrix(), and cuDenseMatrix< DataType >::~cuDenseMatrix().
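The signature listed for del takes void *device_array by value. In C++, an assignment to a by-value parameter does not reach the caller's variable, so a cautious caller may prefer to reset its own copy of the pointer after the call. The host-only sketch below shows one way to combine the NULL check, the release, and a reset that the caller can observe. The name release is hypothetical, and std::free stands in for cudaFree so the code runs without a GPU.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>

// Hypothetical helper: free the buffer if it is non-null, then reset the
// caller's pointer. Taking the pointer by reference is what makes the
// reset visible to the caller.
// Host stand-in: std::free replaces cudaFree.
template <typename ArrayType>
void release(ArrayType*& array)
{
    if (array != NULL)
    {
        std::free(array);
        array = NULL;
    }
}
```

Because the pointer is NULL after the first call, calling the helper a second time on the same variable is a no-op rather than a double free.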

static int get_device ()

Gets the current device in multi-gpu applications.

Returns the id of the current device, a number from 0 to num_gpu_devices-1.

Definition at line 206 of file cuda_interface.cu.

References cudaGetDevice().

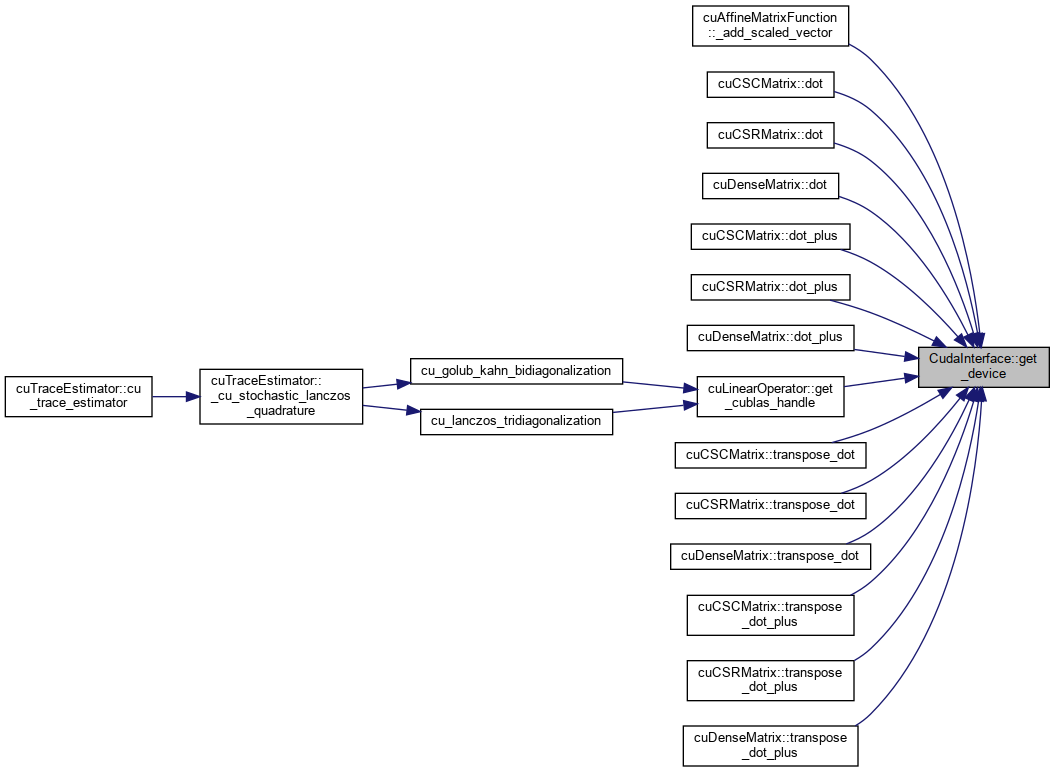

Referenced by cuAffineMatrixFunction< DataType >::_add_scaled_vector(), cuCSCMatrix< DataType >::dot(), cuCSRMatrix< DataType >::dot(), cuDenseMatrix< DataType >::dot(), cuCSCMatrix< DataType >::dot_plus(), cuCSRMatrix< DataType >::dot_plus(), cuDenseMatrix< DataType >::dot_plus(), cuLinearOperator< DataType >::get_cublas_handle(), cuCSCMatrix< DataType >::transpose_dot(), cuCSRMatrix< DataType >::transpose_dot(), cuDenseMatrix< DataType >::transpose_dot(), cuCSCMatrix< DataType >::transpose_dot_plus(), cuCSRMatrix< DataType >::transpose_dot_plus(), and cuDenseMatrix< DataType >::transpose_dot_plus().

static void set_device (int device_id)

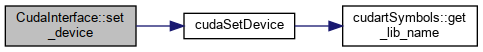

Sets the current device in multi-gpu applications.

Parameters

| Direction | Name | Description |
| --- | --- | --- |
| [in] | device_id | The id of the device to switch to. The id is a number from 0 to num_gpu_devices-1. |

Definition at line 188 of file cuda_interface.cu.

References cudaSetDevice().

Referenced by cuCSCMatrix< DataType >::copy_host_to_device(), cuCSRMatrix< DataType >::copy_host_to_device(), cuDenseMatrix< DataType >::copy_host_to_device(), cuTraceEstimator< DataType >::cu_trace_estimator(), cuLinearOperator< DataType >::initialize_cublas_handle(), cuLinearOperator< DataType >::initialize_cusparse_handle(), cuCSCMatrix< DataType >::~cuCSCMatrix(), cuCSRMatrix< DataType >::~cuCSRMatrix(), cuDenseMatrix< DataType >::~cuDenseMatrix(), and cuLinearOperator< DataType >::~cuLinearOperator().
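In multi-gpu code, a common pattern built on a get/set pair like this one is to remember the current device, switch to another for a block of work, and switch back afterwards. The sketch below illustrates that pattern with an RAII guard. Everything in it is an assumption made for illustration: the mock namespace is a host-only stand-in for the device selection calls, and DeviceGuard is a hypothetical class, not part of the documented interface.

```cpp
#include <cassert>

// Host stand-ins for device selection so the sketch runs without a GPU.
// On a real system these would forward to cudaSetDevice and cudaGetDevice.
namespace mock
{
    static int current_device = 0;

    inline void set_device(int device_id) { current_device = device_id; }
    inline int get_device() { return current_device; }
}

// Hypothetical RAII guard: switches to device_id on construction and
// restores the previously active device on destruction.
class DeviceGuard
{
    public:
        explicit DeviceGuard(int device_id) : previous_(mock::get_device())
        {
            mock::set_device(device_id);
        }

        ~DeviceGuard()
        {
            mock::set_device(previous_);
        }

    private:
        // Copying is disabled so the device is restored exactly once.
        DeviceGuard(const DeviceGuard&);
        DeviceGuard& operator=(const DeviceGuard&);

        int previous_;
};
```

Tying the restore step to a destructor means the previous device is also restored on an early return or when an exception leaves the scope.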