|

imate

C++/CUDA Reference

|

A static class for matrix-vector operations, which are similar to the level-2 operations of the BLAS library. This class acts as a templated namespace, where all member methods are public and static.

#include <c_matrix_operations.h>

Static Public Member Functions | |

| static void | dense_matvec (const DataType *A, const DataType *b, const LongIndexType num_rows, const LongIndexType num_columns, const FlagType A_is_row_major, DataType *c) |

| Computes the matrix vector multiplication \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is a dense matrix. | |

| static void | dense_matvec_plus (const DataType *A, const DataType *b, const DataType alpha, const LongIndexType num_rows, const LongIndexType num_columns, const FlagType A_is_row_major, DataType *c) |

| Computes the operation \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is a dense matrix. | |

| static void | dense_transposed_matvec (const DataType *A, const DataType *b, const LongIndexType num_rows, const LongIndexType num_columns, const FlagType A_is_row_major, DataType *c) |

| Computes matrix vector multiplication \(\boldsymbol{c} = \mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is dense, and \( \mathbf{A}^{\intercal} \) is the transpose of the matrix \( \mathbf{A} \). | |

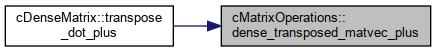

| static void | dense_transposed_matvec_plus (const DataType *A, const DataType *b, const DataType alpha, const LongIndexType num_rows, const LongIndexType num_columns, const FlagType A_is_row_major, DataType *c) |

| Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is dense, and \( \mathbf{A}^{\intercal} \) is the transpose of the matrix \( \mathbf{A} \). | |

| static void | csr_matvec (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_rows, DataType *c) |

| Computes \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

| static void | csr_matvec_plus (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_rows, DataType *c) |

| Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

| static void | csr_transposed_matvec (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_rows, const LongIndexType num_columns, DataType *c) |

| Computes \(\boldsymbol{c} =\mathbf{A}^{\intercal} \boldsymbol{b}\) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

| static void | csr_transposed_matvec_plus (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_rows, DataType *c) |

| Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b}\) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

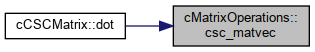

| static void | csc_matvec (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_rows, const LongIndexType num_columns, DataType *c) |

| Computes \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

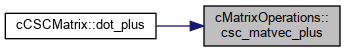

| static void | csc_matvec_plus (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_columns, DataType *c) |

| Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

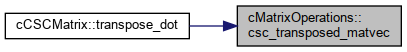

| static void | csc_transposed_matvec (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_columns, DataType *c) |

| Computes \(\boldsymbol{c} =\mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

| static void | csc_transposed_matvec_plus (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_columns, DataType *c) |

| Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector. | |

| static void | create_band_matrix (const DataType *diagonals, const DataType *supdiagonals, const IndexType non_zero_size, const FlagType tridiagonal, DataType **matrix) |

| Creates bi-diagonal or symmetric tri-diagonal matrix from the diagonal array (diagonals) and off-diagonal array (supdiagonals). | |

A static class for matrix-vector operations, which are similar to the level-2 operations of the BLAS library. This class acts as a templated namespace, where all member methods are public and static.

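This class implements matrix-vector multiplication for three types of matrices:

- Dense matrix (with either row-major or column-major storage)
- Compressed sparse row (CSR) matrix
- Compressed sparse column (CSC) matrix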

For each of the above matrix types, there are four kinds of matrix vector multiplications implemented.

- dot: performs \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \).
- dot_plus: performs \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \).
- transpose_dot: performs \( \boldsymbol{c} = \mathbf{A}^{\intercal} \boldsymbol{b} \).
- transpose_dot_plus: performs \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b} \).

Definition at line 56 of file c_matrix_operations.h.

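| static void | create_band_matrix (const DataType *diagonals, const DataType *supdiagonals, const IndexType non_zero_size, const FlagType tridiagonal, DataType **matrix) |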

Creates bi-diagonal or symmetric tri-diagonal matrix from the diagonal array (diagonals) and off-diagonal array (supdiagonals).

The output is written in place (in matrix). The output is only written up to the non_zero_size element, that is: matrix[:non_zero_size,:non_zero_size] is filled, and the rest is assumed to be zero.

Depending on tridiagonal, the matrix is upper bi-diagonal or symmetric tri-diagonal.

| [in] | diagonals | An array of length n. Its elements form the main diagonal of matrix. |

| [in] | supdiagonals | An array of length n. Elements supdiagonals[0:-1] form the upper off-diagonal of matrix, making matrix an upper bi-diagonal matrix. In addition, if tridiagonal is set to 1, the lower off-diagonal is filled with the same elements, making matrix a symmetric tri-diagonal matrix. |

| [in] | non_zero_size | Only the leading block matrix[:non_zero_size,:non_zero_size] of matrix is written. At most, non_zero_size can be n, which is the length of the diagonals array and the size of the square matrix. If non_zero_size is less than n, it is because diagonals or supdiagonals contains only zeros beyond its first non_zero_size elements (possibly due to early termination of the Lanczos iterations). |

| [in] | tridiagonal | Boolean. If set to 0, the matrix becomes upper bi-diagonal. If set to 1, the matrix becomes symmetric tri-diagonal. |

| [out] | matrix | A 2D matrix (written in place) of the shape \( (n, n) \). |

Definition at line 1021 of file c_matrix_operations.cpp.
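The filling rule described above can be sketched as follows. This is only an illustration under stated assumptions, not the library's implementation: the name `sketch_create_band_matrix` and the `IndexType` and `FlagType` aliases are hypothetical stand-ins, and the sketch assumes that entries outside the band were already set to zero by the caller.

```cpp
#include <cassert>

// Hypothetical stand-ins for the library's typedefs (assumed here).
using IndexType = int;
using FlagType = int;

// Fills the leading non_zero_size x non_zero_size block of `matrix`.
// Entries outside the band are left untouched (assumed to be zero already).
template <typename DataType>
void sketch_create_band_matrix(
        const DataType* diagonals,
        const DataType* supdiagonals,
        const IndexType non_zero_size,
        const FlagType tridiagonal,
        DataType** matrix)
{
    assert(non_zero_size >= 0);

    for (IndexType j = 0; j < non_zero_size; ++j)
    {
        // Main diagonal
        matrix[j][j] = diagonals[j];

        // Off-diagonals: the last row of the block has no super-diagonal
        if (j < non_zero_size - 1)
        {
            matrix[j][j + 1] = supdiagonals[j];

            if (tridiagonal)
            {
                // Mirror the super-diagonal to obtain a symmetric matrix
                matrix[j + 1][j] = supdiagonals[j];
            }
        }
    }
}
```

With `tridiagonal` set to 0, only the main diagonal and the upper off-diagonal are written, which gives the upper bi-diagonal case.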

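| static void | csc_matvec (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_rows, const LongIndexType num_columns, DataType *c) |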

Computes \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

| [in] | A_data | CSC format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_row_indices | CSC format row indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSC format index pointer. The length of this array is one plus the number of columns of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of columns of A. |

| [in] | num_rows | Number of rows of the matrix A. |

| [in] | num_columns | Number of columns of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [out] | c | Output column vector whose size is the number of rows of A. This array is written in-place. |

Definition at line 735 of file c_matrix_operations.cpp.

Referenced by cCSCMatrix< DataType >::dot().
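As an illustration of how a CSC product \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) can be organized, here is a minimal sketch. It is not the library's code: `sketch_csc_matvec` and the `LongIndexType` alias are hypothetical, and the column-by-column scatter loop is one common way to do it. It suggests why `num_rows` is needed here: the output must be zeroed before contributions are scattered into it.

```cpp
#include <cassert>

// Hypothetical stand-in for the library's index typedef (assumed here).
using LongIndexType = long;

template <typename DataType>
void sketch_csc_matvec(
        const DataType* A_data,
        const LongIndexType* A_row_indices,
        const LongIndexType* A_index_pointer,
        const DataType* b,
        const LongIndexType num_rows,
        const LongIndexType num_columns,
        DataType* c)
{
    assert(num_rows >= 0 && num_columns >= 0);

    // The output is accumulated by scattering, so it must start from zero
    for (LongIndexType row = 0; row < num_rows; ++row)
    {
        c[row] = 0;
    }

    // Column j contributes b[j] * A(:, j) to the output
    for (LongIndexType column = 0; column < num_columns; ++column)
    {
        for (LongIndexType p = A_index_pointer[column];
             p < A_index_pointer[column + 1]; ++p)
        {
            c[A_row_indices[p]] += A_data[p] * b[column];
        }
    }
}
```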

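| static void | csc_matvec_plus (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_columns, DataType *c) |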

Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

| [in] | A_data | CSC format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_row_indices | CSC format row indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSC format index pointer. The length of this array is one plus the number of columns of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of columns of A. |

| [in] | alpha | A scalar that scales the matrix vector multiplication. |

| [in] | num_columns | Number of columns of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [in,out] | c | Output column vector whose size is the number of rows of A. This array is written in-place. |

Definition at line 801 of file c_matrix_operations.cpp.

Referenced by cCSCMatrix< DataType >::dot_plus().

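| static void | csc_transposed_matvec (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_columns, DataType *c) |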

Computes \(\boldsymbol{c} =\mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A_data | CSC format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_row_indices | CSC format row indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSC format index pointer. The length of this array is one plus the number of columns of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of rows of A. |

| [in] | num_columns | Number of columns of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [out] | c | Output column vector whose size is the number of columns of A. This array is written in-place. |

Definition at line 872 of file c_matrix_operations.cpp.

Referenced by cCSCMatrix< DataType >::transpose_dot().
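For the transposed CSC product, each output entry is a dot product between one stored column of \( \mathbf{A} \) and \( \boldsymbol{b} \), so a single reduction variable per column suffices. The sketch below illustrates this with a long double accumulator, as the note above describes. The name `sketch_csc_transposed_matvec` and the `LongIndexType` alias are assumptions made for illustration only.

```cpp
#include <cassert>

// Hypothetical stand-in for the library's index typedef (assumed here).
using LongIndexType = long;

template <typename DataType>
void sketch_csc_transposed_matvec(
        const DataType* A_data,
        const LongIndexType* A_row_indices,
        const LongIndexType* A_index_pointer,
        const DataType* b,
        const LongIndexType num_columns,
        DataType* c)
{
    assert(num_columns >= 0);

    for (LongIndexType column = 0; column < num_columns; ++column)
    {
        // Wider accumulator limits round-off when DataType is float
        long double sum = 0.0L;

        // c[column] is the dot product of column `column` of A with b
        for (LongIndexType p = A_index_pointer[column];
             p < A_index_pointer[column + 1]; ++p)
        {
            sum += static_cast<long double>(A_data[p]) * b[A_row_indices[p]];
        }

        c[column] = static_cast<DataType>(sum);
    }
}
```

Because every entry of the output is assigned exactly once, no zero-initialization pass is needed, which is consistent with the signature taking only `num_columns`.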

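| static void | csc_transposed_matvec_plus (const DataType *A_data, const LongIndexType *A_row_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_columns, DataType *c) |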

Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse column (CSC) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A_data | CSC format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_row_indices | CSC format row indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSC format index pointer. The length of this array is one plus the number of columns of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of rows of A. |

| [in] | alpha | A scalar that scales the matrix vector multiplication. |

| [in] | num_columns | Number of columns of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [in,out] | c | Output column vector whose size is the number of columns of A. This array is written in-place. |

Definition at line 943 of file c_matrix_operations.cpp.

Referenced by cCSCMatrix< DataType >::transpose_dot_plus().

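| static void | csr_matvec (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_rows, DataType *c) |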

Computes \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A_data | CSR format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_column_indices | CSR format column indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSR format index pointer. The length of this array is one plus the number of rows of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of columns of A. |

| [in] | num_rows | Number of rows of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [out] | c | Output column vector whose size is the number of rows of A. This array is written in-place. |

Definition at line 469 of file c_matrix_operations.cpp.

Referenced by cCSRMatrix< DataType >::dot().
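A row-wise CSR product with a long double reduction variable can be sketched as below. This is a hedged illustration of the technique described above, not the library's source: `sketch_csr_matvec` and the `LongIndexType` alias are hypothetical names.

```cpp
#include <cassert>

// Hypothetical stand-in for the library's index typedef (assumed here).
using LongIndexType = long;

template <typename DataType>
void sketch_csr_matvec(
        const DataType* A_data,
        const LongIndexType* A_column_indices,
        const LongIndexType* A_index_pointer,
        const DataType* b,
        const LongIndexType num_rows,
        DataType* c)
{
    assert(num_rows >= 0);

    for (LongIndexType row = 0; row < num_rows; ++row)
    {
        // Wider accumulator limits round-off when DataType is float
        long double sum = 0.0L;

        // Non-zeros of this row occupy [index_pointer[row], index_pointer[row+1])
        for (LongIndexType p = A_index_pointer[row];
             p < A_index_pointer[row + 1]; ++p)
        {
            sum += static_cast<long double>(A_data[p]) * b[A_column_indices[p]];
        }

        c[row] = static_cast<DataType>(sum);
    }
}
```

The accumulator is cast back to `DataType` only once per row, so the extra precision is kept throughout the inner loop.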

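| static void | csr_matvec_plus (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const DataType alpha, const LongIndexType num_rows, DataType *c) |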

Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A_data | CSR format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_column_indices | CSR format column indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSR format index pointer. The length of this array is one plus the number of rows of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of columns of A. |

| [in] | alpha | A scalar that scales the matrix vector multiplication. |

| [in] | num_rows | Number of rows of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [in,out] | c | Output column vector whose size is the number of rows of A. This array is written in-place. |

Definition at line 540 of file c_matrix_operations.cpp.

Referenced by cCSRMatrix< DataType >::dot_plus().

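| static void | csr_transposed_matvec (const DataType *A_data, const LongIndexType *A_column_indices, const LongIndexType *A_index_pointer, const DataType *b, const LongIndexType num_rows, const LongIndexType num_columns, DataType *c) |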

Computes \(\boldsymbol{c} =\mathbf{A}^{\intercal} \boldsymbol{b}\) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

| [in] | A_data | CSR format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_column_indices | CSR format column indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSR format index pointer. The length of this array is one plus the number of rows of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of rows of A. |

| [in] | num_rows | Number of rows of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [in] | num_columns | Number of columns of the matrix A. |

| [out] | c | Output column vector whose size is the number of columns of A. This array is written in-place. |

Definition at line 606 of file c_matrix_operations.cpp.

Referenced by cCSRMatrix< DataType >::transpose_dot().
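In CSR storage the transposed product cannot be written as one dot product per output entry, because the non-zeros are grouped by row. A common approach, sketched here under that assumption, scatters each row's contribution into the output. The name `sketch_csr_transposed_matvec` and the `LongIndexType` alias are hypothetical.

```cpp
#include <cassert>

// Hypothetical stand-in for the library's index typedef (assumed here).
using LongIndexType = long;

template <typename DataType>
void sketch_csr_transposed_matvec(
        const DataType* A_data,
        const LongIndexType* A_column_indices,
        const LongIndexType* A_index_pointer,
        const DataType* b,
        const LongIndexType num_rows,
        const LongIndexType num_columns,
        DataType* c)
{
    assert(num_rows >= 0 && num_columns >= 0);

    // The output has one entry per column of A and starts from zero
    for (LongIndexType column = 0; column < num_columns; ++column)
    {
        c[column] = 0;
    }

    // Row i of A contributes b[i] * A(i, :) to the output
    for (LongIndexType row = 0; row < num_rows; ++row)
    {
        for (LongIndexType p = A_index_pointer[row];
             p < A_index_pointer[row + 1]; ++p)
        {
            c[A_column_indices[p]] += A_data[p] * b[row];
        }
    }
}
```

The scatter pattern has no single reduction variable, which may be why this member carries no note about a long double accumulator.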

|

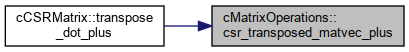

static |

Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b}\) where \( \mathbf{A} \) is compressed sparse row (CSR) matrix and \( \boldsymbol{b} \) is a dense vector. The output \( \boldsymbol{c} \) is a dense vector.

| [in] | A_data | CSR format data array of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_column_indices | CSR format column indices of the sparse matrix. The length of this array is the nnz of the matrix. |

| [in] | A_index_pointer | CSR format index pointer. The length of this array is one plus the number of rows of the matrix. Also, the first element of this array is 0, and the last element is the nnz of the matrix. |

| [in] | b | Column vector whose size is the number of rows of A. |

| [in] | alpha | A scalar that scales the matrix vector multiplication. |

| [in] | num_rows | Number of rows of the matrix A. This is essentially the size of A_index_pointer array minus one. |

| [in,out] | c | Output column vector whose size is the number of columns of A. This array is written in-place. |

Definition at line 672 of file c_matrix_operations.cpp.

Referenced by cCSRMatrix< DataType >::transpose_dot_plus().

|

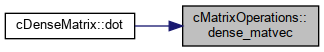

static |

Computes the matrix vector multiplication \( \boldsymbol{c} = \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is a dense matrix.

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A | 1D array that represents a 2D dense array with either C (row) major ordering or Fortran (column) major ordering. The major ordering should be defined by the A_is_row_major flag. |

| [in] | b | Column vector |

| [in] | num_rows | Number of rows of A |

| [in] | num_columns | Number of columns of A |

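| [in] | A_is_row_major | Boolean, can be 0 or 1: set to 1 if A is stored with row-major (C) ordering, and to 0 if A is stored with column-major (Fortran) ordering. |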

| [out] | c | The output column vector (written in-place). |

Definition at line 61 of file c_matrix_operations.cpp.

Referenced by cDenseMatrix< DataType >::dot().
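The role of the A_is_row_major flag and of the long double reduction variable can be sketched as follows. This is an illustrative sketch rather than the library's implementation: `sketch_dense_matvec` and the `LongIndexType` and `FlagType` aliases are assumptions, and the flag is assumed to mean 1 for row-major and 0 for column-major storage.

```cpp
#include <cassert>

// Hypothetical stand-ins for the library's typedefs (assumed here).
using LongIndexType = long;
using FlagType = int;

template <typename DataType>
void sketch_dense_matvec(
        const DataType* A,
        const DataType* b,
        const LongIndexType num_rows,
        const LongIndexType num_columns,
        const FlagType A_is_row_major,
        DataType* c)
{
    assert(num_rows >= 0 && num_columns >= 0);

    for (LongIndexType i = 0; i < num_rows; ++i)
    {
        // Wider accumulator limits round-off when DataType is float
        long double sum = 0.0L;

        for (LongIndexType j = 0; j < num_columns; ++j)
        {
            // Position of A(i, j) in the flattened 1D array
            const LongIndexType k = A_is_row_major ?
                i * num_columns + j : j * num_rows + i;

            sum += static_cast<long double>(A[k]) * b[j];
        }

        c[i] = static_cast<DataType>(sum);
    }
}
```

A production version would likely branch on the flag outside the loops so that each case walks memory contiguously. The per-element ternary is kept here only to make the two index formulas easy to compare.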

|

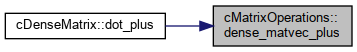

static |

Computes the operation \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) where \( \mathbf{A} \) is a dense matrix.

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A | 1D array that represents a 2D dense array with either C (row) major ordering or Fortran (column) major ordering. The major ordering should be defined by the A_is_row_major flag. |

| [in] | b | Column vector |

| [in] | alpha | A scalar that scales the matrix vector multiplication. |

| [in] | num_rows | Number of rows of A |

| [in] | num_columns | Number of columns of A |

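| [in] | A_is_row_major | Boolean, can be 0 or 1: set to 1 if A is stored with row-major (C) ordering, and to 0 if A is stored with column-major (Fortran) ordering. |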

| [in,out] | c | The output column vector (written in-place). |

Definition at line 181 of file c_matrix_operations.cpp.

Referenced by cDenseMatrix< DataType >::dot_plus().
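The update form \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A} \boldsymbol{b} \) differs from the plain product only in how the reduced sum is stored. A minimal sketch, assuming the same hypothetical aliases and flag convention (1 for row-major) as elsewhere on this page, with the invented name `sketch_dense_matvec_plus`:

```cpp
#include <cassert>

// Hypothetical stand-ins for the library's typedefs (assumed here).
using LongIndexType = long;
using FlagType = int;

template <typename DataType>
void sketch_dense_matvec_plus(
        const DataType* A,
        const DataType* b,
        const DataType alpha,
        const LongIndexType num_rows,
        const LongIndexType num_columns,
        const FlagType A_is_row_major,
        DataType* c)
{
    assert(num_rows >= 0 && num_columns >= 0);

    for (LongIndexType i = 0; i < num_rows; ++i)
    {
        // Reduce row i of A against b in extended precision
        long double sum = 0.0L;

        for (LongIndexType j = 0; j < num_columns; ++j)
        {
            const LongIndexType k = A_is_row_major ?
                i * num_columns + j : j * num_rows + i;

            sum += static_cast<long double>(A[k]) * b[j];
        }

        // Accumulate into the existing content of c instead of overwriting
        c[i] += alpha * static_cast<DataType>(sum);
    }
}
```

Since `c` is read as well as written, the caller is responsible for initializing it, which matches its `[in,out]` direction in the parameter table.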

|

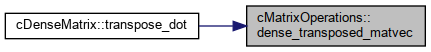

static |

Computes matrix vector multiplication \(\boldsymbol{c} = \mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is dense, and \( \mathbf{A}^{\intercal} \) is the transpose of the matrix \( \mathbf{A} \).

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A | 1D array that represents a 2D dense array with either C (row) major ordering or Fortran (column) major ordering. The major ordering should be defined by the A_is_row_major flag. |

| [in] | b | Column vector |

| [in] | num_rows | Number of rows of A |

| [in] | num_columns | Number of columns of A |

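| [in] | A_is_row_major | Boolean, can be 0 or 1: set to 1 if A is stored with row-major (C) ordering, and to 0 if A is stored with column-major (Fortran) ordering. |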

| [out] | c | The output column vector (written in-place). |

Definition at line 278 of file c_matrix_operations.cpp.

Referenced by cDenseMatrix< DataType >::transpose_dot().
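For the transposed dense product, the roles of rows and columns are swapped: each output entry reduces one column of \( \mathbf{A} \) against \( \boldsymbol{b} \), so \( \boldsymbol{b} \) has num_rows entries and \( \boldsymbol{c} \) has num_columns entries. The sketch below illustrates this under the same assumptions as before: hypothetical aliases, an invented name `sketch_dense_transposed_matvec`, and a flag value of 1 meaning row-major.

```cpp
#include <cassert>

// Hypothetical stand-ins for the library's typedefs (assumed here).
using LongIndexType = long;
using FlagType = int;

template <typename DataType>
void sketch_dense_transposed_matvec(
        const DataType* A,
        const DataType* b,
        const LongIndexType num_rows,
        const LongIndexType num_columns,
        const FlagType A_is_row_major,
        DataType* c)
{
    assert(num_rows >= 0 && num_columns >= 0);

    for (LongIndexType j = 0; j < num_columns; ++j)
    {
        // Reduce column j of A against b in extended precision
        long double sum = 0.0L;

        for (LongIndexType i = 0; i < num_rows; ++i)
        {
            // Position of A(i, j) in the flattened 1D array
            const LongIndexType k = A_is_row_major ?
                i * num_columns + j : j * num_rows + i;

            sum += static_cast<long double>(A[k]) * b[i];
        }

        c[j] = static_cast<DataType>(sum);
    }
}
```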

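| static void | dense_transposed_matvec_plus (const DataType *A, const DataType *b, const DataType alpha, const LongIndexType num_rows, const LongIndexType num_columns, const FlagType A_is_row_major, DataType *c) |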

Computes \( \boldsymbol{c} = \boldsymbol{c} + \alpha \mathbf{A}^{\intercal} \boldsymbol{b} \) where \( \mathbf{A} \) is dense, and \( \mathbf{A}^{\intercal} \) is the transpose of the matrix \( \mathbf{A} \).

The reduction variable (here, sum) is of type long double. This is because when DataType is float, the summation loses precision, especially when the vector size is large. Using long double appears to be slightly faster than using double. The benefit of a wider type for the reduction variable is only noticeable when the code is compiled with the -O2 or -O3 optimization flags.

| [in] | A | 1D array that represents a 2D dense array with either C (row) major ordering or Fortran (column) major ordering. The major ordering should be defined by the A_is_row_major flag. |

| [in] | b | Column vector |

| [in] | alpha | A scalar that scales the matrix vector multiplication. |

| [in] | num_rows | Number of rows of A |

| [in] | num_columns | Number of columns of A |

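| [in] | A_is_row_major | Boolean, can be 0 or 1: set to 1 if A is stored with row-major (C) ordering, and to 0 if A is stored with column-major (Fortran) ordering. |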

| [in,out] | c | The output column vector (written in-place). |

Definition at line 371 of file c_matrix_operations.cpp.

Referenced by cDenseMatrix< DataType >::transpose_dot_plus().