Comparison of Randomized Algorithms#

imate implements various deterministic and randomized algorithms on dense and sparse matrices. The goal of the following numerical experiments is to compare the performance, scalability, and accuracy of these algorithms.

Test Description#

The following numerical experiments aims to estimate

and

where \(\mathbf{A}\) is symmetric and positive-definite. The above quantities are computationally expensive expressions that frequently appears in the likelihood functions and their Jacobian and Hessian.

Algorithms#

The following Algorithms were tested on Intel® Xeon CPU E5-2670 v3 with 24 threads.

- Cholesky Decomposition#

This method is implemented by the following functions:

imate.logdet(method=’cholesky’) to compute (1).

imate.traceinv(method=’cholesky’) to compute (2).

The complexity of computing (1) for matrices obtained from 1D, 2D, and 3D grids are respectively \(\mathcal{O}(n)\), \(\mathcal{O}(n^{\frac{3}{2}})\), and \(\mathcal{O}(n^2)\) where \(n\) is the matrix size. The complexity of computing (2) for sparse matrices is \(\mathcal{O}(\rho n^2)\) where \(\rho\) is the sparse matrix density.

- Hutchinson Algorithm#

This method is only applied to (2) and implemented by imate.traceinv(method=’hutchinson’) function. The complexity of this method is:

(3)#\[\mathcal{O}(\mathrm{nnz}(\mathbf{A})s),\]where \(s\) is the number of Monte-Carlo iterations in the algorithm and \(\rho\) is the sparse matrix density. In this experiment, \(s = 80\).

- Stochastic Lanczos Quadrature Algorithm#

This method is implemented by:

imate.logdet(method=’cholesky’) to compute (1).

imate.traceinv(method=’cholesky’) to compute (2).

The complexity of this method is:

(4)#\[\mathcal{O} \left( (\mathrm{nnz}(\mathbf{A}) l + n l^2) s \right),\]where \(l\) is the number of Lanczos iterations, and \(s\) is the number of Monte-Carlo iterations. The numerical experiment is performed with \(l=80\) and \(s=200\).

Arithmetic Types#

The benchmark test also examines the performance and accuracy of imate on various arithmetic types of the matrix data. To this end, the matrices that are described below are re-cast into 32-bit, 64-bit, and 128-bit floating point types.

Note

Supporting 128-bit data types is one of the features of imate, which is often not available in numerical libraries, such as BLAS, OpenBLAS, Cholmod, etc.

Test on Simple Matrices#

The Gramian matrix \(\mathbf{A} = \mathbf{B}^{\intercal} \mathbf{B}\) is considered for the test where \(\mathbf{B}\) is a sparse bi-diagonal Toeplitz matrix defined by

The above matrix can be generated by imate.toeplitz() function. In this experiment, \(a = 2\), \(b = 1\), and the matrix size is varied by powers of two, \(n = 2^8, 2^9, \dots, 2^{14}\).

The Cholesky factor of \(\mathbf{A}\) is \(\mathbf{B}^{\intercal}\). Also, \(\mathrm{nnz}(\mathbf{A}) = 3n\). An advantage of using the above matrix is that an analytic formula for (1) and (2) is known. Namely,

See imate.sample_matrices.toeplitz_logdet() for details. Also, if \(n \gg 1\), then

where \(q = b/a\). See imate.sample_matrices.toeplitz_traceinv() for details. The above analytic formulas are used as the benchmark solution to test the accuracy of the results.

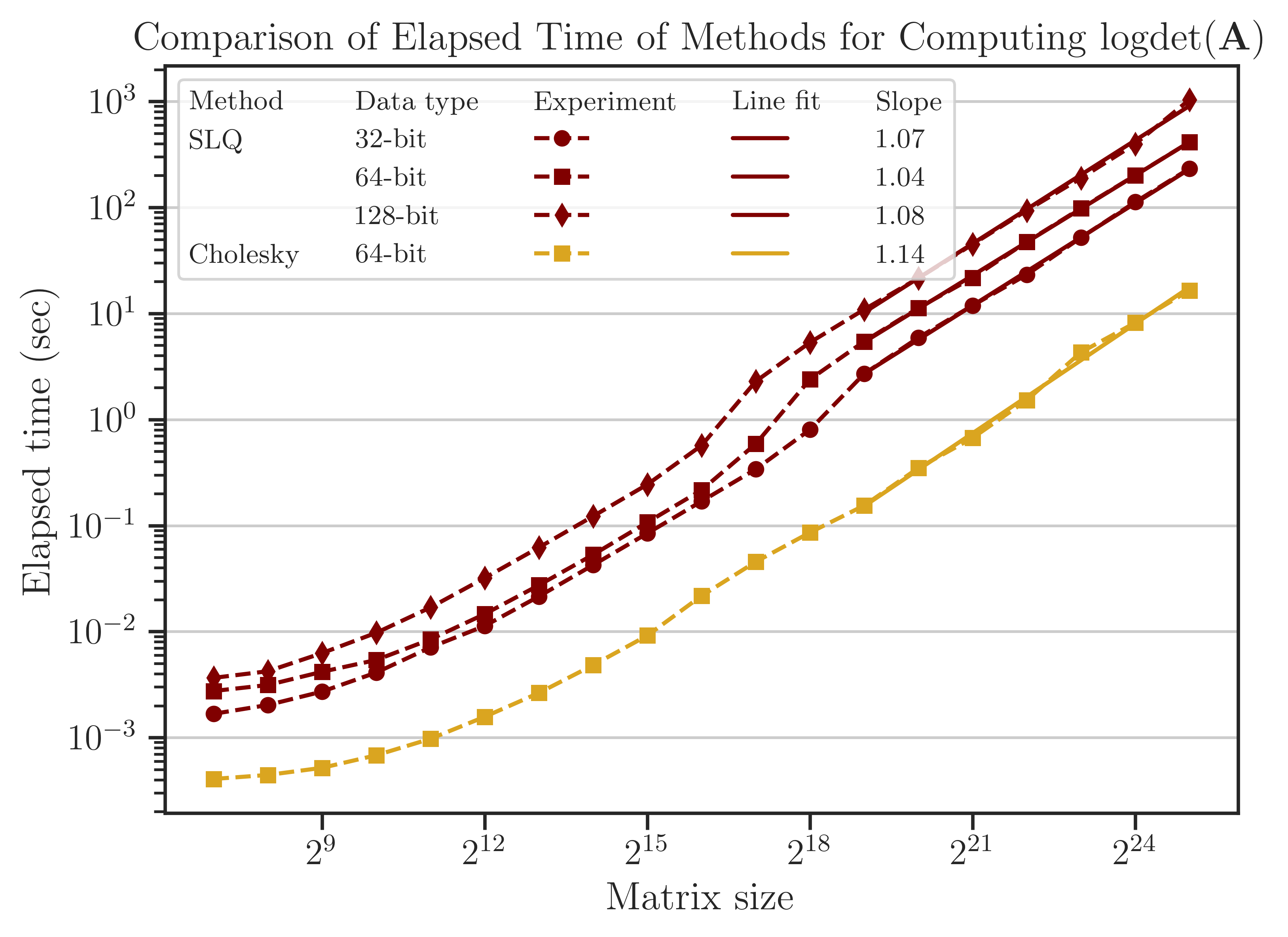

Elapsed Time of Computing Log-Determinant#

The elapsed (wall) time of the computations is shown in the figure below. The Cholesky method is faster than the SLQ method by an order of magnitude, hence it could be considered the preferred algorithm to compute log-determinant.

For large matrix sizes, \(n \geq 2^{19}\), the elapsed time can be related to the matrix size as \(t \propto n^{\alpha}\). It can be seen from the slope of the fitted lines in the figure that for the SLQ method, \(\alpha\) is close to 1. This result is consistent with the analytic complexity \(\mathcal{O}(n)\) derived from (4) and using \(\mathrm{nnz}(\mathbf{A}) \sim n\) for a tri-diagonal matrix. Similarly, for the Cholesky method, \(\alpha \approx 1\), which corresponds to the analytic complexity of computing log-determinant for matrices obtained from 1D meshes.

Note

The Cholesky method imate.logdet(method=’cholesky’) uses logdet function from SuiteSparse’s Cholmod library, which only supports 64-bit data types. Because of this, the performance test for 32-bit and 128-bit is not available. In contrast, imate’s implementation of randomized algorithms supports all data types shown in the above figure.

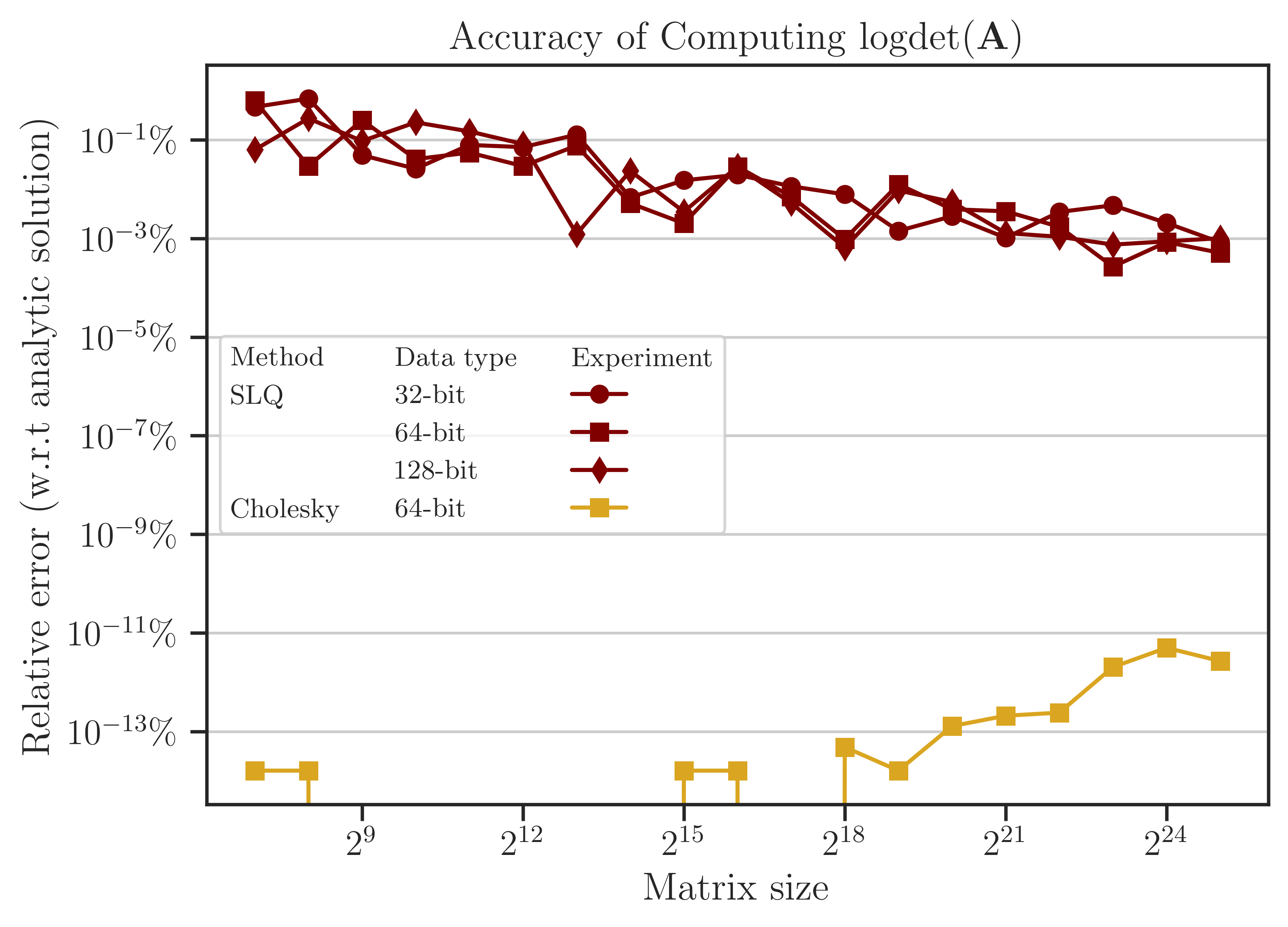

Accuracy of Computing Log-Determinant#

The error of computing (1) is shown in the figure below, which is obtained by comparing the results with the benchmark solution (5). The error of the Cholesky method is close to the machine precision (almost zero) since it is a direct method. On the other hand, the error of the SLQ method, as a randomized algorithm, is non-zero, yet very small. Such a remarkably small error of the SLQ method is due to the specific matrix used in the test and can be explained by the localized distribution of the eigenvalues of \(\mathbf{A}\) which makes the SLQ method very effective in estimating (1) with only a few Lanczos iterations. However, for practical matrices, usually, the error of randomized methods is larger than the figure below.

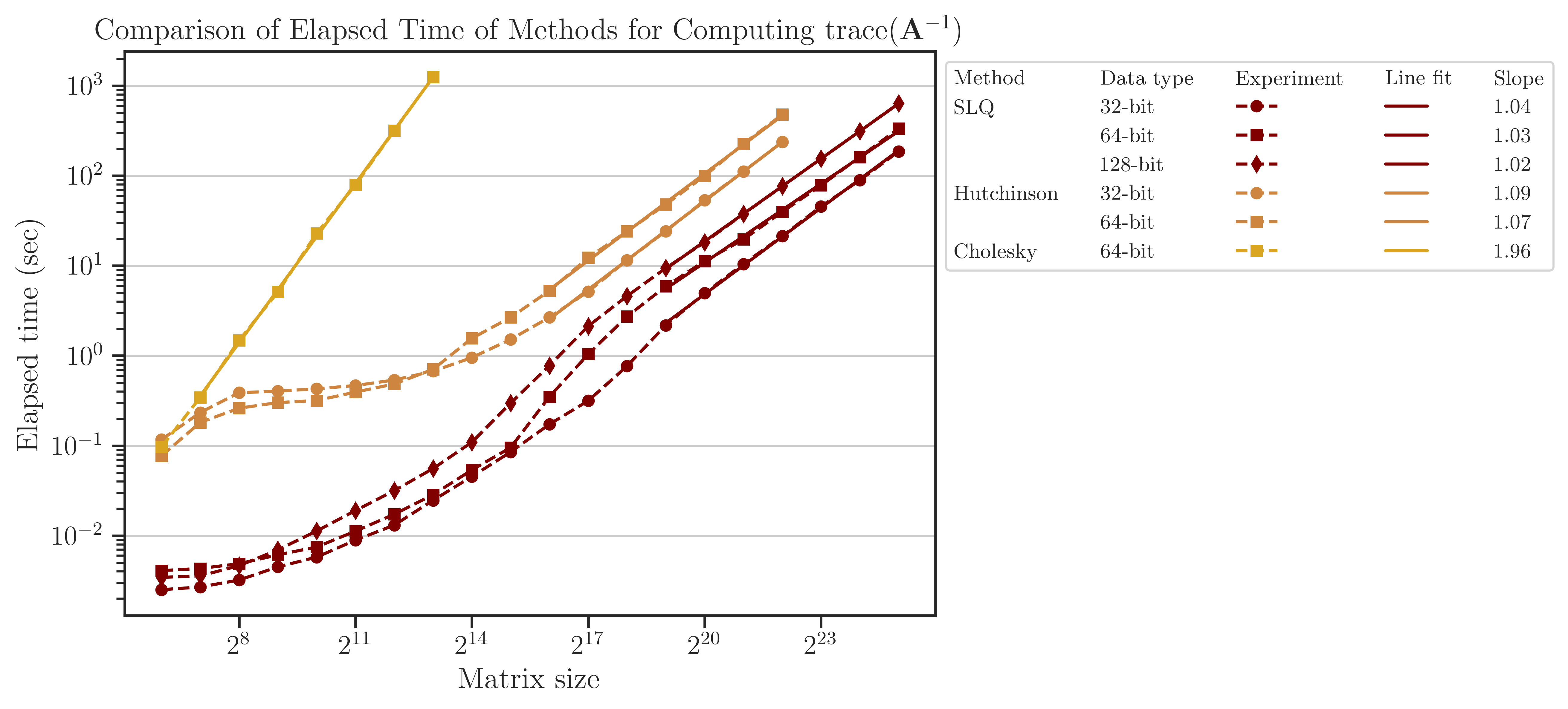

Elapsed Time of Computing Trace of Inverse#

The elapsed (wall) time of the computations is shown in the figure below. Unlike the above results for log-determinant, the Cholesky method here is significantly slower than the SLQ method. In fact, computing the trace of inverse of matrices is one of the applications where the performance of randomized methods surpasses the direct methods significantly.

The computational complexity can be quantified by the relation between the elapsed time and the matrix size as \(t \propto n^{\alpha}\). It can be seen from the slope of the fitted lines in the figure that for Hutchinson and SLQ methods, \(\alpha\) is close to 1. This result is consistent with the analytic complexity \(\mathcal{O}(n)\) derived from (3) and (4) and using \(\mathrm{nnz}(\mathbf{A}) \sim n\) for a tri-diagonal matrix. Similarly, for the Cholesky method, \(\alpha \approx 2\), which corresponds to the analytic complexity \(\mathcal{O}(n^2)\) for computing the trace of matrix inverse.

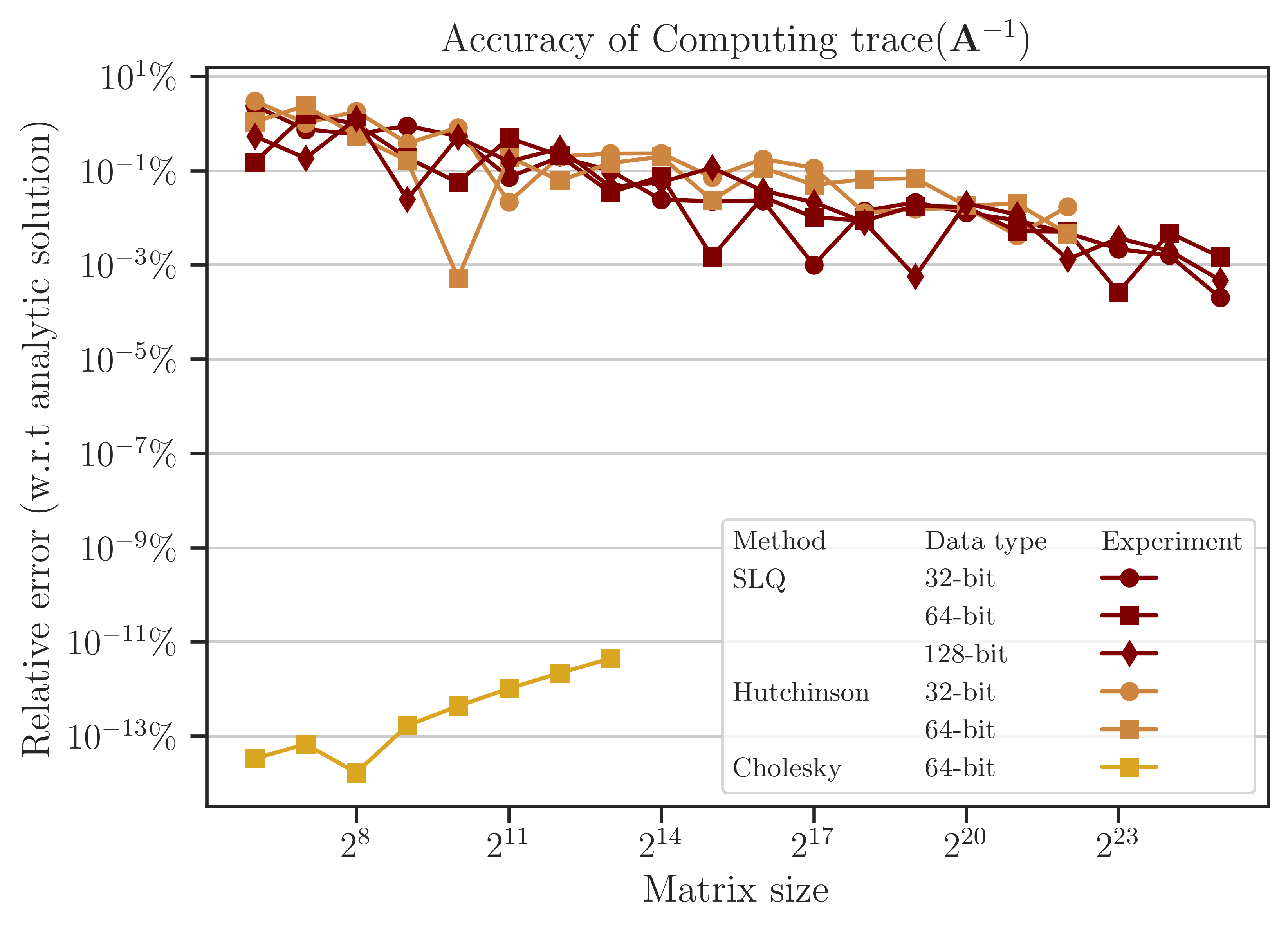

Accuracy of Computing Trace of Inverse#

The error of computing (2) is obtained from the benchmark solution (6) and shown in the figure below. The error of the Cholesky method is close to the machine precision (almost zero) since it is a direct method. On the other hand, the SLQ method, as a randomized algorithm, is non-zero. As mentioned previously, such a remarkable small error of the SLQ method is due to the specific matrix \(\mathbf{A}\) used in the test as its eigenvalues have a localized distribution, allowing the SLQ method to estimate (2) with a few Lanczos iterations.

Test on Practical Matrices#

The performance of algorithms was also examined on practical matrices. The table below shows the practical matrices used in the test, which are chosen from SuiteSparse Matrix Collection and are obtained from real applications. The matrices in the table below are all symmetric positive-definite. The number of nonzero elements (nnz) of these matrices increases approximately by a factor of 5 on average and their sparse density remains at the same order of magnitude (except for the first three).

Matrix Name |

Size |

nnz |

Density |

Application |

|---|---|---|---|---|

468 |

5,172 |

0.02 |

Structural Problem |

|

4,800 |

27,520 |

0.001 |

Electromagnetics |

|

19,366 |

134,208 |

0.0003 |

Structural Problem |

|

150,102 |

726,674 |

0.00003 |

Circuit Simulation |

|

525,825 |

3,674,625 |

0.00001 |

Computational Fluid Dynamics |

|

1,465,137 |

21,005,389 |

0.00001 |

Computational Fluid Dynamics |

|

2,911,419 |

127,729,899 |

0.00001 |

Structural Problem |

|

4,147,110 |

329,499,284 |

0.00002 |

Structural Problem |

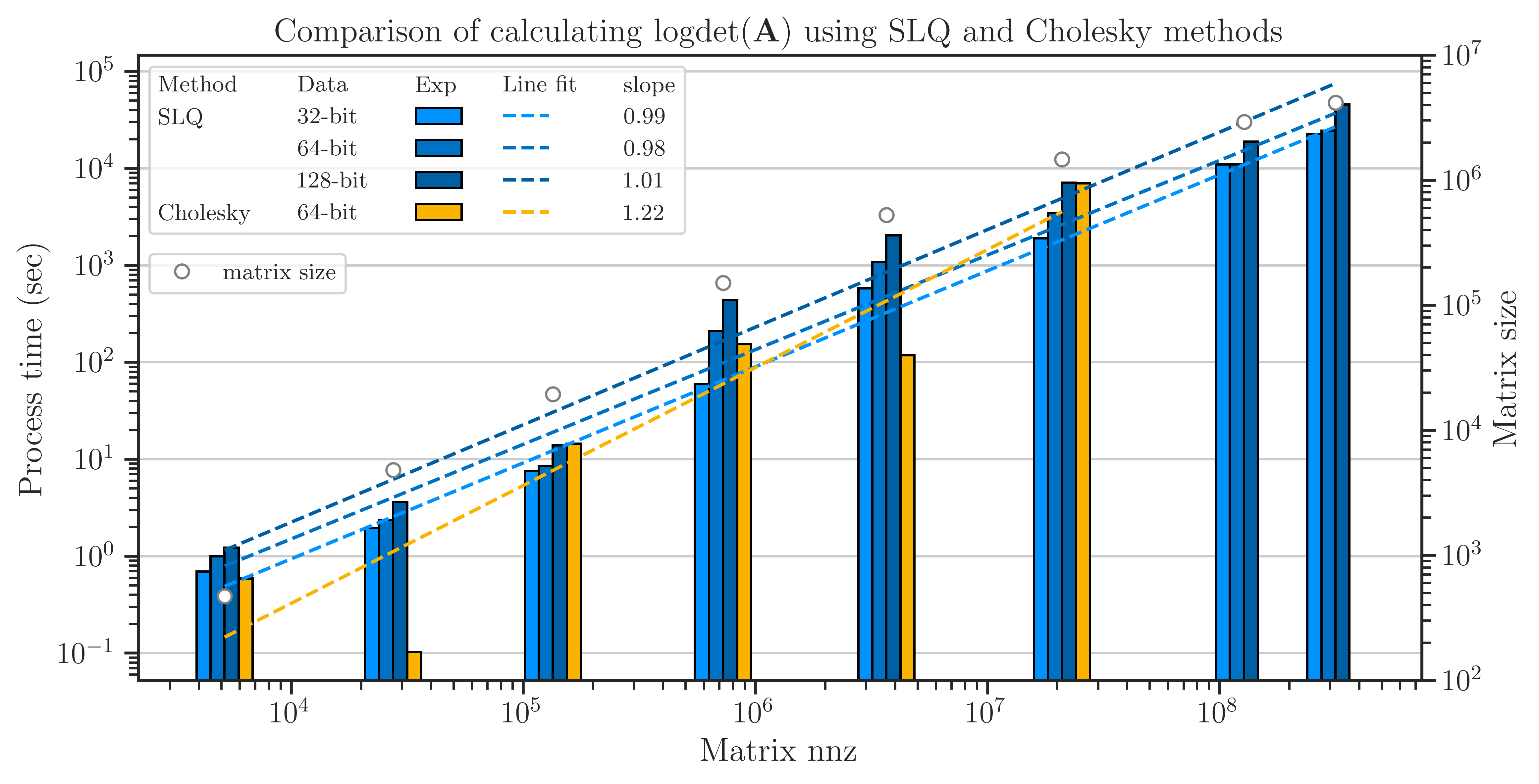

Process Time of Computing Log-Determinant#

The elapsed (wall) time of the computations is shown in the figure below. Unlike the results of the Toeplitz matrices, here, the result of comparing the Cholesky method and SLQ method is mixed with the Cholesky method often being marginally faster than the SLQ method. However, the SLQ method is scalable to larger matrices, whereas the Cholesky method often crashes at \(n > 10^8\).

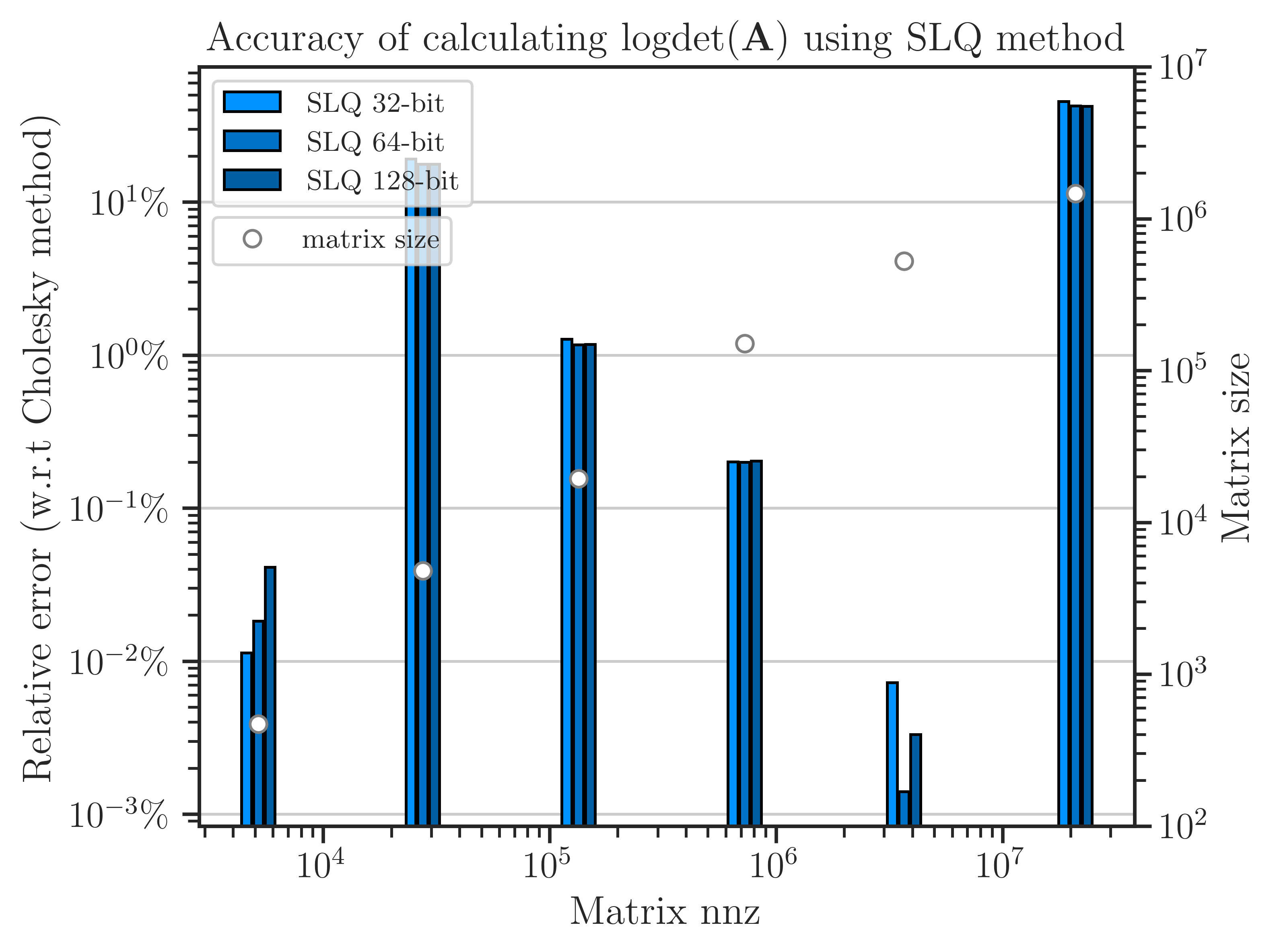

Accuracy of Computing Log-Determinant#

The error of computing log-determinant using the SLQ method is shown in the figure below, which is obtained by comparing with the result of the Cholesky method as a benchmark. For four of the matrices, the error is less than \(1 \%\). However, for the second and the sixth matrices in the figure, the error is above \(10 \%\). To reduce the error, a higher number of Monte-Carlo iterations are needed on these matrices.

Varying Algorithm Parameters#

A known issue of the Lanczos algorithm is that the eigenvectors computed during the recusance Lanczos iterations lose their orthogonality. A solution to this issue is the re-orthogonalization of the newly computed eigenvectors with either some or all previous eigenvectors, which is known as partial or full orthogonalization, respectively. imate supports both types of orthogonalization techniques.

In the following tests, the effect of re-orthogonalizations of the eigenvectors and the effect of varying the number of Lanczos iterations are examined.

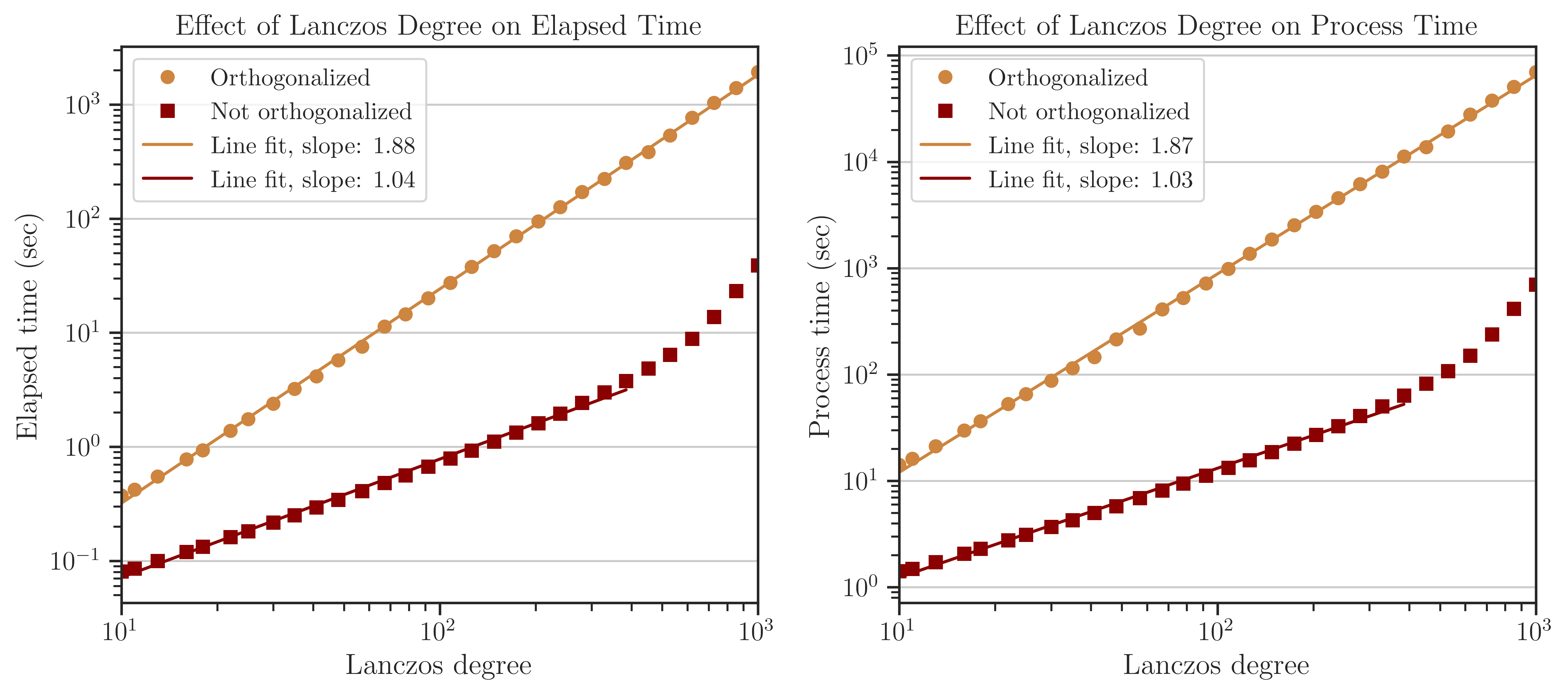

Process Time of Computing Log-Determinant#

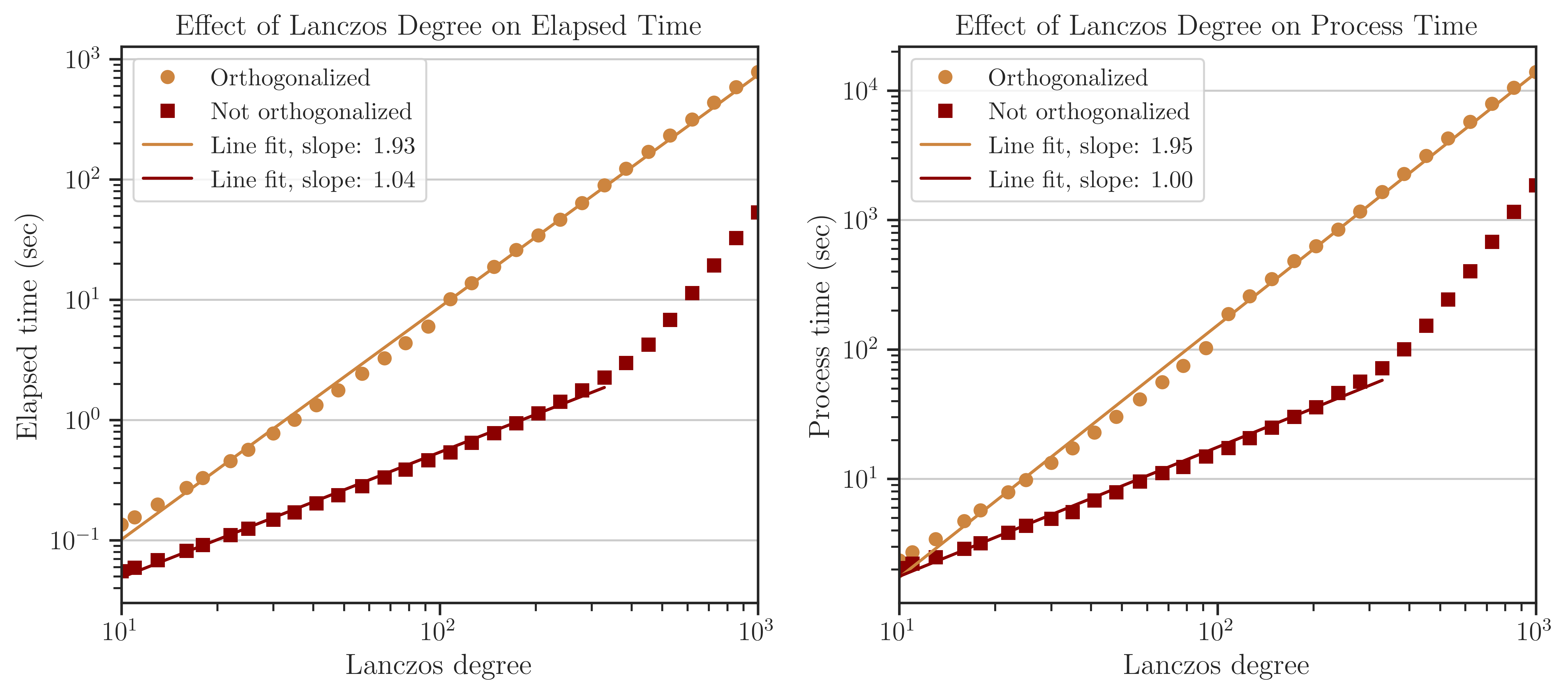

The test below corresponds to the tri-diagonal matrix \(\mathbf{A}\) (See Test on Simple Matrices) with \(n = 2^{14}\). The figure below shows the elapsed time (left) and process time (right) of computing the log-determinant versus the number of Lanczos iterations, \(l\) (also known as Lanczos degree). The orange and red curves respectively show the results with and without orthogonalization of the eigenvectors in the Lanczos algorithm. The processing time is proportional to \(\mathcal{O}(l)\) without orthogonalization, which is consistent with the complexity given in (4) when \(\mathrm{nnz}(\mathbf{A})\) is large. However, the processing time is almost \(\mathcal{O}(l^{2})\) with orthogonalization for \(l < 300\), which is consistent with the complexity of the Gram-Schmit orthogonalization process.

Note that at \(l > 300\), the processing time suddenly increases. This effect does not reflect a mathematical complexity, rather, is due to the fact that the Gram-Schmidt process is a memory-bounded process when the number of vectors is large. Specifically, when full-orthogonalization is used, all previous eigenvectors of the Lanczos iterations should be stored on the memory in a single large array. However, by increasing the number of eigenvectors, the size of the arrays grows larger than the memory bandwidth of the CPU cache, making the memory access significantly inefficient.

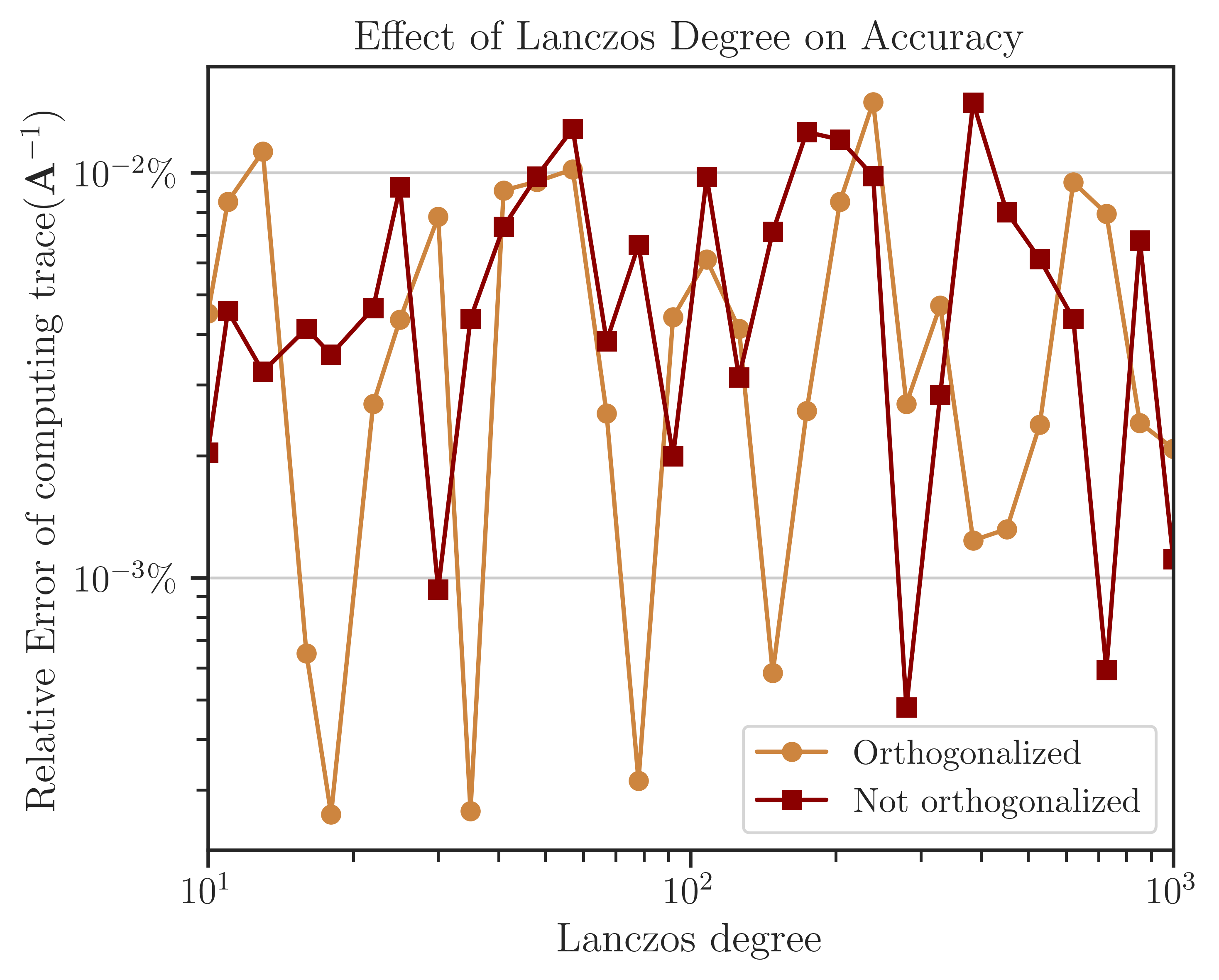

Accuracy of Computing Log-Determinant#

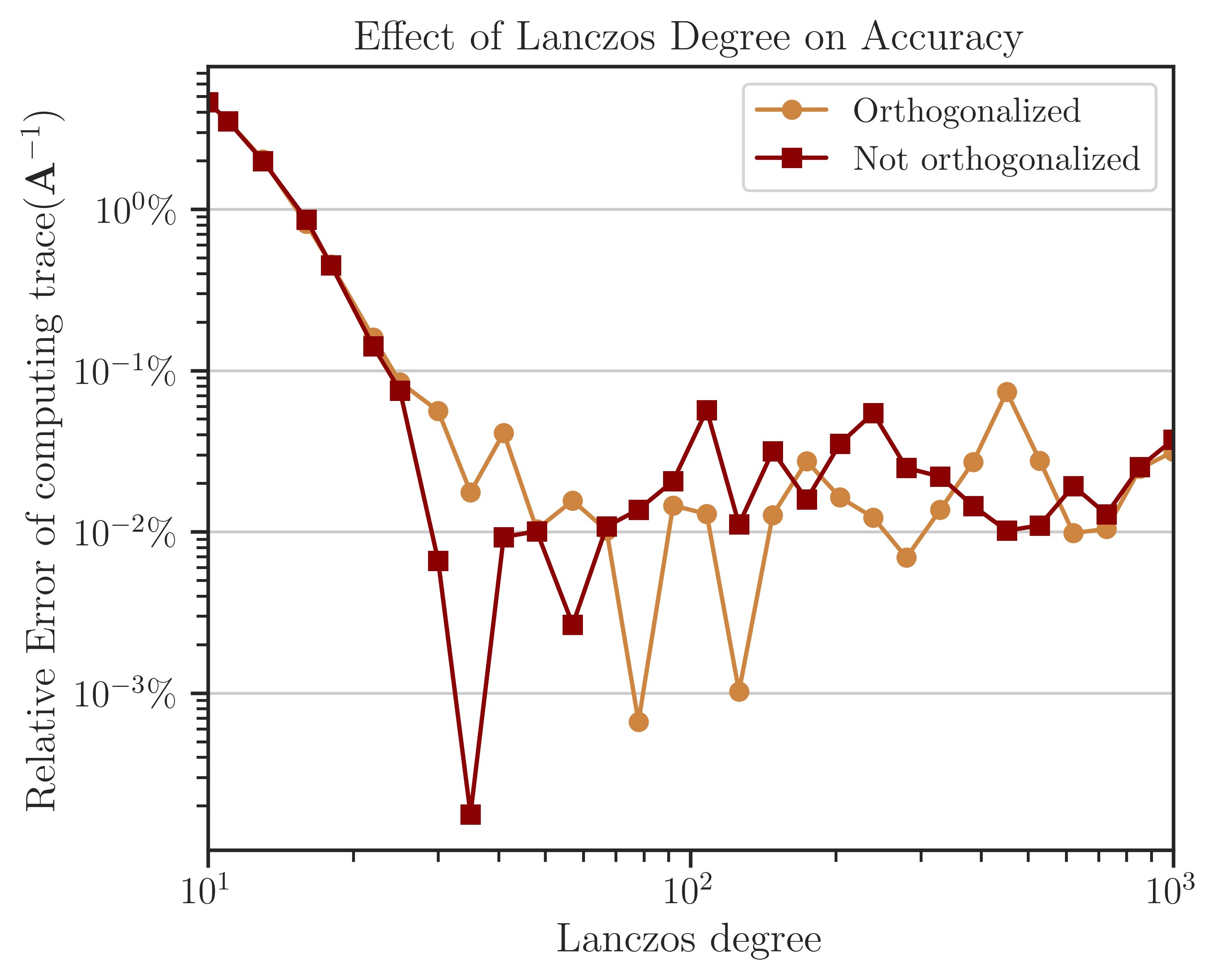

The figure below shows the error of computing log-determinant by comparing the results with the analytic value in (5). While the full-orthogonalization process in the Lanczos algorithm is an order of magnitude slower than without using orthogonalization, it provides a higher accuracy by an order of magnitude. The figure also implies that only a few Lanczos iterations, \(l = 30 \sim 60\) are sufficient to obtain a reasonable order of accuracy. This result holds for most applications.

Process Time of Computing Trace of Inverse#

The above tests were also performed on the practical matrices (see Test on Practical Matrices). The figure below corresponds to computing the race of inverse of the matrix jnlbrng1 with \(n = 40000\). The results are very similar to the previous test on simple matrices. Namely, the complexity of the process with and without orthogonalization is \(\mathcal{O}(l^2)\) and \(\mathcal{O}(l)\), respectively. Also, orthogonalization is by an order of magnitude slower than without orthogonalization and the effect of the cache memory bandwidth can be seen at \(l > 300\).;

Accuracy of Computing Trace of Inverse#

The error of computing the trace of inverse of the test matrix is shown below, which is obtained by comparison of the results with the Cholesky method (not shown here). The difference between the error of the process with and without orthogonalization is insignificant, hence, one may not use orthogonalization as it is effectively faster. Also, as suggested by the figure’s results, a practical choice for the number of Lanczos iterations is \(l = 20 \sim 50\) for most applications.

How to Reproduce Results#

Prepare Matrix Data#

Download all the above-mentioned sparse matrices from SuiteSparse Matrix Collection. For instance, download

Queen_4147.matfromQueen_4147.Run

/imate/benchmark/matrices/read_matrix.mto extract sparse matrix data fromQueen_4147.mat:read_matrix('Queen_4147.mat');

Run

/imate/benchmark/matrices/read_matrix.pyto convert the outputs of the above script to generate a python pickle file:read_matrix.py Queen_4147 float32 # to generate 32-bit data read_matrix.py Queen_4147 float64 # to generate 64-bit data read_matrix.py Queen_4147 float128 # to generate 128-bit data

The output of the above script will be written in

/imate/benchmark/matrices/.

Perform Numerical Test#

Run Locally#

Run

/imate/benchmark/scripts/compare_methods_analytic_matrix.pyto reproduce results for Toeplitz matrices as followscd /imate/benchmark/scripts python ./compare_methods_analytic_matrix.py -a -f logdet # log-determinant test python ./compare_methods_analytic_matrix.py -a -f traceinv # trace of inverse test

Run

/imate/benchmark/scripts/compare_methods_practical_matrix.pyto reproduce results for practical matrices as followscd /imate/benchmark/scripts python ./compare_methods_practical_matrix.py -a -f logdet # log-determinant test

Run

/imate/benchmark/scripts/vary_lanczos_degree_analytic_matrix.pyto reproduce results for varying algorithm parameters on simple matrices:cd /imate/benchmark/scripts python ./vary_lanczos_degree_analytic_matrix.py

Run

/imate/benchmark/scripts/vary_lanczos_degree_practical_matrix.pyto reproduce results for varying algorithm parameters on practical matrices:cd /imate/benchmark/scripts python ./vary_lanczos_degree_practical_matrix.py

Submit to Cluster with SLURM#

Submit

/imate/benchmark/scripts/jobfile_compare_methods_analytic_matrix_logdet.shto reproduce results of log-determinant of simple matrices:cd /imate/benchmark/jobfiles sbatch ./jobfile_compare_methods_analytic_matrix_logdet.sh

Submit

/imate/benchmark/scripts/jobfile_compare_methods_analytic_matrix_traceinv.shto reproduce results of the trace of inverse of simple matrices:cd /imate/benchmark/jobfiles sbatch ./jobfile_compare_methods_analytic_matrix_traceinv.sh

Submit

/imate/benchmark/scripts/jobfile_compare_methods_practical_matrix_logdet.shto reproduce results of log-determinant of simple matrices:cd /imate/benchmark/jobfiles sbatch ./jobfile_compare_methods_practical_matrix_logdet.sh

Submit

/imate/benchmark/scripts/jobfile_vary_lanczos_degree_analytic_matrix.shto reproduce results of varying parameters on simple matrices:cd /imate/benchmark/jobfiles sbatch ./jobfile_vary_lanczos_degree_analytic_matrix.sh

Submit

/imate/benchmark/scripts/jobfile_vary_lanczos_degree_practical_matrix.shto reproduce results of varying parameters on practical matrices:cd /imate/benchmark/jobfiles sbatch ./jobfile_vary_lanczos_degree_practical_matrix.sh

Plot Results#

Run

/imate/benchmark/notebooks/plot_compare_methods_analytic_matrix_logdet.ipynbto generate plots for computing the log-determinants of Toeplitz matrices.Run

/imate/benchmark/notebooks/plot_compare_methods_analytic_matrix_traceinv.ipynbto generate plots for computing the trace of inverse of Toeplitz matrices.Run

/imate/benchmark/notebooks/plot_compare_methods_analytic_matrix_logdet.ipynbto generate plots for computing the log-determinants of the practical matrices.Run

/imate/benchmark/notebooks/plot_vary_lanczos_degree_analytic_matrix.ipynbto generate plots for varying algorithm parameters on Toeplitz matrices.Run

/imate/benchmark/notebooks/plot_vary_lanczos_degree_practical_matrix.ipynbto generate plots for varying algorithm parameters on practical matrices.

These notebooks stores the plots as svg files in /imate/benchmark/svg_plots/.