imate

C++/CUDA Reference

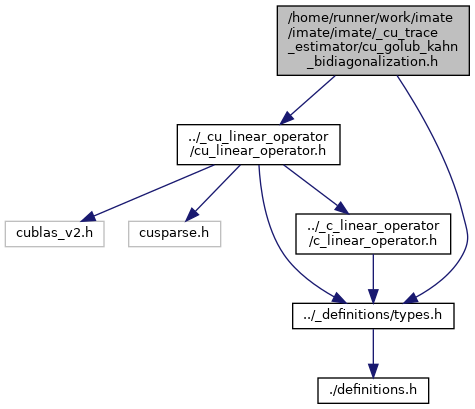


Functions

| template<typename DataType > IndexType | cu_golub_kahn_bidiagonalization (cuLinearOperator< DataType > *A, const DataType *v, const LongIndexType n, const IndexType m, const DataType lanczos_tol, const FlagType orthogonalize, DataType *alpha, DataType *beta) |

Bi-diagonalizes the positive-definite matrix A using the Golub-Kahn-Lanczos method.

template<typename DataType >
IndexType cu_golub_kahn_bidiagonalization (cuLinearOperator< DataType > *A, const DataType *v, const LongIndexType n, const IndexType m, const DataType lanczos_tol, const FlagType orthogonalize, DataType *alpha, DataType *beta)

Bi-diagonalizes the positive-definite matrix A using the Golub-Kahn-Lanczos method.

This method bi-diagonalizes the matrix A to B using the start vector v. m is the Lanczos degree, which is also the size of the square matrix B.

The outputs of this function are alpha (of length m) and beta (of length m+1), which hold the diagonal (alpha[:]) and off-diagonal (beta[1:]) elements of the bi-diagonal (m,m) symmetric and positive-definite matrix B.
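To make the recurrence behind alpha and beta concrete, here is a minimal host-side sketch of the textbook Golub-Kahn upper bi-diagonalization. It is not the library's GPU implementation: `DenseMatrix` and `golub_kahn_sketch` are hypothetical names, the dense matrix merely stands in for a linear operator with `dot()` and `transpose_dot()`, no re-orthogonalization is done, and the breakdown test against `tol` is an assumption.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical dense, row-major stand-in for a linear operator.
struct DenseMatrix {
    std::size_t n;
    std::vector<double> a;  // n*n entries, row-major

    // y = A x
    void dot(const std::vector<double>& x, std::vector<double>& y) const {
        for (std::size_t i = 0; i < n; ++i) {
            double s = 0.0;
            for (std::size_t j = 0; j < n; ++j) s += a[i * n + j] * x[j];
            y[i] = s;
        }
    }

    // y = A^T x
    void transpose_dot(const std::vector<double>& x, std::vector<double>& y) const {
        for (std::size_t j = 0; j < n; ++j) {
            double s = 0.0;
            for (std::size_t i = 0; i < n; ++i) s += a[i * n + j] * x[i];
            y[j] = s;
        }
    }
};

static double norm2(const std::vector<double>& x) {
    double s = 0.0;
    for (double xi : x) s += xi * xi;
    return std::sqrt(s);
}

// Runs at most m steps and returns how many entries of alpha and beta
// were written before the loop ended or broke down.
int golub_kahn_sketch(const DenseMatrix& A, const std::vector<double>& v0,
                      int m, double tol,
                      std::vector<double>& alpha, std::vector<double>& beta) {
    const std::size_t n = A.n;
    assert(v0.size() == n && m > 0);
    alpha.assign(static_cast<std::size_t>(m), 0.0);
    beta.assign(static_cast<std::size_t>(m), 0.0);

    std::vector<double> v(v0), u(n, 0.0), u_new(n), v_new(n);
    const double v_norm = norm2(v);
    assert(v_norm > 0.0);
    for (double& vi : v) vi /= v_norm;  // normalized start vector

    int size = 0;
    double beta_prev = 0.0;
    for (int j = 0; j < m; ++j) {
        ++size;

        // u_new = A v - beta_{j-1} u, then alpha_j = ||u_new||
        A.dot(v, u_new);
        for (std::size_t i = 0; i < n; ++i) u_new[i] -= beta_prev * u[i];
        alpha[j] = norm2(u_new);
        if (alpha[j] <= tol) break;  // assumed breakdown test
        for (std::size_t i = 0; i < n; ++i) u[i] = u_new[i] / alpha[j];

        // v_new = A^T u - alpha_j v, then beta_j = ||v_new||
        A.transpose_dot(u, v_new);
        for (std::size_t i = 0; i < n; ++i) v_new[i] -= alpha[j] * v[i];
        beta[j] = norm2(v_new);
        if (beta[j] <= tol) break;  // residual vanished: stop early
        for (std::size_t i = 0; i < n; ++i) v[i] = v_new[i] / beta[j];
        beta_prev = beta[j];
    }
    return size;
}
```

With a matrix that is a multiple of the identity, the residual vanishes in the first step and the sketch returns 1, so only one entry of alpha is meaningful.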

When A is very close to the identity matrix, the Golub-Kahn bi-diagonalization method cannot find beta, because beta becomes zero. If A is not exactly the identity, you may decrease lanczos_tol to a very small number. However, if A is almost the identity matrix, decreasing lanczos_tol will not help, and this function cannot be used.

| [in] | A | A linear operator that represents a matrix of size (n, n) and provides the matrix-vector operation with the dot() method and the transposed matrix-vector operation with the transpose_dot() method. This matrix should be positive-definite. |

| [in] | v | Start vector for the bi-diagonalization. Column vector of size n. It can be generated randomly, often from the Rademacher distribution with entries +1 and -1. |

| [in] | n | Size of the square matrix A, which is also the size of the vector v. |

| [in] | m | Lanczos degree, which is the number of Lanczos iterations. |

| [in] | lanczos_tol | The tolerance of the residual error of the Lanczos iteration. |

| [in] | orthogonalize | Indicates whether to re-orthogonalize the eigenvectors during the recursive Lanczos iterations. |

| [out] | alpha | A 1D array of size m. The elements alpha[:] constitute the diagonal elements of the bi-diagonal matrix B. This array is an output and is written in place. |

| [out] | beta | A 1D array of size m. The elements beta[:] constitute the super-diagonal elements of the bi-diagonal matrix B. This array is an output and is written in place. |
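The parameter list mentions that the start vector v is often drawn from the Rademacher distribution. A minimal sketch of generating such a vector on the host follows. `rademacher_vector` is a hypothetical helper and not part of the documented API, and the choice of `double` and of a seeded `std::mt19937` is an assumption made for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <random>
#include <vector>

// Hypothetical helper: fills a vector of size n with entries +1 or -1,
// each chosen with probability 1/2.
std::vector<double> rademacher_vector(std::size_t n, unsigned seed) {
    assert(n > 0);
    std::mt19937 gen(seed);
    std::bernoulli_distribution coin(0.5);
    std::vector<double> v(n);
    for (double& vi : v) vi = coin(gen) ? 1.0 : -1.0;
    return v;
}
```

Every entry has magnitude one, so the Euclidean norm of the result is always sqrt(n), whatever the seed.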

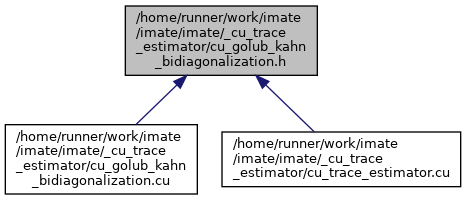

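Returns the size of the bi-diagonal matrix B. If the iterations terminate early, the size of alpha and beta, and hence of the output bi-diagonal matrix, is smaller than m. This counter keeps track of the non-zero size of alpha and beta.

Definition at line 113 of file cu_golub_kahn_bidiagonalization.cu.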
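Because the returned counter can be smaller than m, a caller should read only that many leading entries of alpha and beta. The sketch below assembles a dense bi-diagonal matrix from such a prefix. `assemble_bidiagonal` is a hypothetical helper, and placing beta[i] at position (i, i+1) is an assumption about the indexing convention, which this page does not pin down.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: builds a dense, row-major k-by-k upper bi-diagonal
// matrix from the first k entries of alpha and the first k-1 entries of beta.
// Assumed convention: alpha[i] sits at (i, i) and beta[i] at (i, i+1).
std::vector<double> assemble_bidiagonal(const double* alpha, const double* beta, int k) {
    assert(alpha != nullptr && beta != nullptr && k > 0);
    const std::size_t n = static_cast<std::size_t>(k);
    std::vector<double> B(n * n, 0.0);
    for (std::size_t i = 0; i < n; ++i) {
        B[i * n + i] = alpha[i];                    // diagonal
        if (i + 1 < n) B[i * n + i + 1] = beta[i];  // super-diagonal
    }
    return B;
}
```

Here k would be the value returned by the function, so entries past an early termination are never read.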

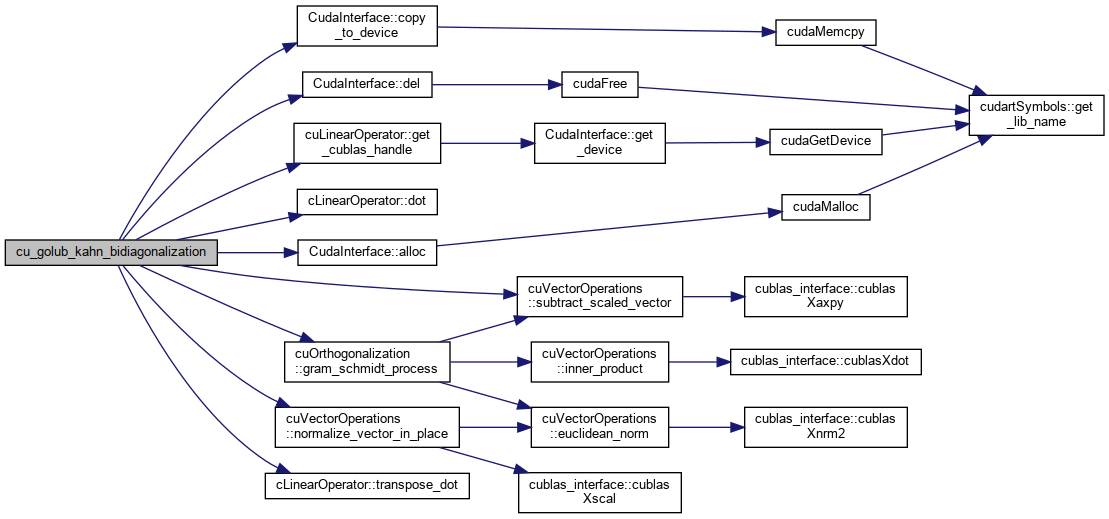

References CudaInterface< ArrayType >::alloc(), CudaInterface< ArrayType >::copy_to_device(), CudaInterface< ArrayType >::del(), cLinearOperator< DataType >::dot(), cuLinearOperator< DataType >::get_cublas_handle(), cuOrthogonalization< DataType >::gram_schmidt_process(), cuVectorOperations< DataType >::normalize_vector_in_place(), cuVectorOperations< DataType >::subtract_scaled_vector(), and cLinearOperator< DataType >::transpose_dot().

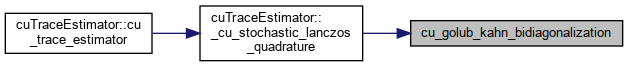

Referenced by cuTraceEstimator< DataType >::_cu_stochastic_lanczos_quadrature().