|

imate

C++/CUDA Reference

|

|

imate

C++/CUDA Reference

|

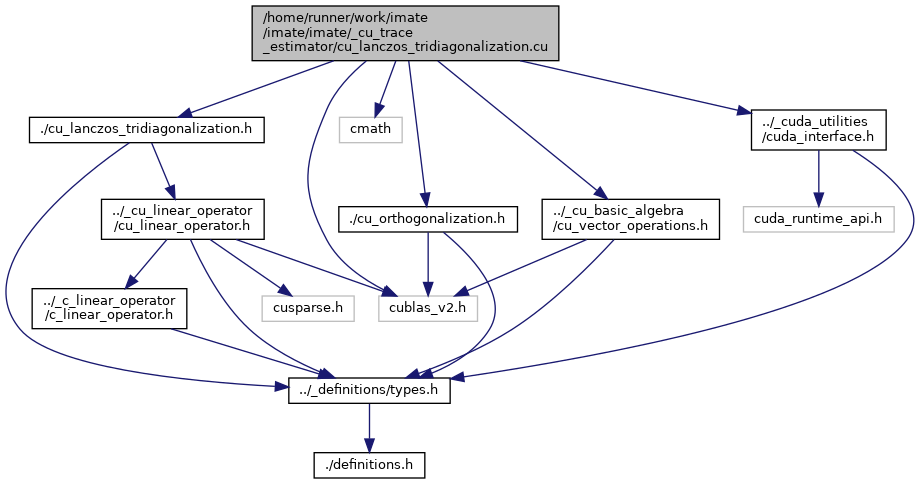

#include "./cu_lanczos_tridiagonalization.h"#include <cublas_v2.h>#include <cmath>#include "./cu_orthogonalization.h"#include "../_cu_basic_algebra/cu_vector_operations.h"#include "../_cuda_utilities/cuda_interface.h"

Go to the source code of this file.

Functions | |

| template<typename DataType > | |

| IndexType | cu_lanczos_tridiagonalization (cuLinearOperator< DataType > *A, const DataType *v, const LongIndexType n, const IndexType m, const DataType lanczos_tol, const FlagType orthogonalize, DataType *alpha, DataType *beta) |

Tri-diagonalizes matrix A to T using the start vector v. is the Lanczos degree, which will be the size of square matrix T. More... | |

| template IndexType | cu_lanczos_tridiagonalization< float > (cuLinearOperator< float > *A, const float *v, const LongIndexType n, const IndexType m, const float lanczos_tol, const FlagType orthogonalize, float *alpha, float *beta) |

| template IndexType | cu_lanczos_tridiagonalization< double > (cuLinearOperator< double > *A, const double *v, const LongIndexType n, const IndexType m, const double lanczos_tol, const FlagType orthogonalize, double *alpha, double *beta) |

| IndexType cu_lanczos_tridiagonalization | ( | cuLinearOperator< DataType > * | A, |

| const DataType * | v, | ||

| const LongIndexType | n, | ||

| const IndexType | m, | ||

| const DataType | lanczos_tol, | ||

| const FlagType | orthogonalize, | ||

| DataType * | alpha, | ||

| DataType * | beta | ||

| ) |

Tri-diagonalizes matrix A to T using the start vector v. is the Lanczos degree, which will be the size of square matrix T.

The output of this function is not an explicit matrix T, rather are the two arrays alpha of length m and beta of length m+1. The array alpha[:] represents the diagonal elements and beta[1:] represents the off-diagonal elements of the tri-diagonal (m,m) symmetric and positive-definite matrix T.

The algorithm and notations are obtained from [DEMMEL], p. 57, Algorithm 4.6 (see also [SAAD] p. 137, Algorithm 6.5). However there are four ways to implement the iteration. [PAIGE]_ has shown that the iteration that is implemented below is the most stable against loosing orthogonality of the eigenvectors. For details, see [CULLUM]_ p. 46, and p.48, particularly the algorithm denoted by A(2,7). The differences of these implementations are the order in which \( \alpha_j \) and \( \beta_j \) are defined and the order in which vectors are subtracted from r .

| [in] | A | A linear operator that represents a matrix of size dot() method. This matrix should be positive-definite. |

| [in] | v | Start vector for the Lanczos tri-diagonalization. Column vector of size c n. It could be generated randomly. Often it is generated by the Rademacher distribution with entries c +1 and -1. |

| [in] | n | Size of the square matrix A, which is also the size of the vector v. |

| [in] | m | Lanczos degree, which is the number of Lanczos iterations. |

| [in] | lanczos_tol | The tolerance of the residual error of the Lanczos iteration. |

| [in] | orthogonalize | Indicates whether to orthogonalize the orthogonal eigenvectors during Lanczos recursive iterations.

|

| [out] | alpha | This is a 1D array of size m. The array alpha[:] constitute the diagonal elements of the tri-diagonal matrix T. This is the output and written in place. |

| [out] | beta | This is a 1D array of size m. The array beta[:] constitute the off-diagonals of the tri-diagonal matrix T. This array is the output and written in place. |

alpha and beta, and hence the output tri-diagonal matrix, is smaller. This counter keeps track of the non-zero size of alpha and beta. Definition at line 119 of file cu_lanczos_tridiagonalization.cu.

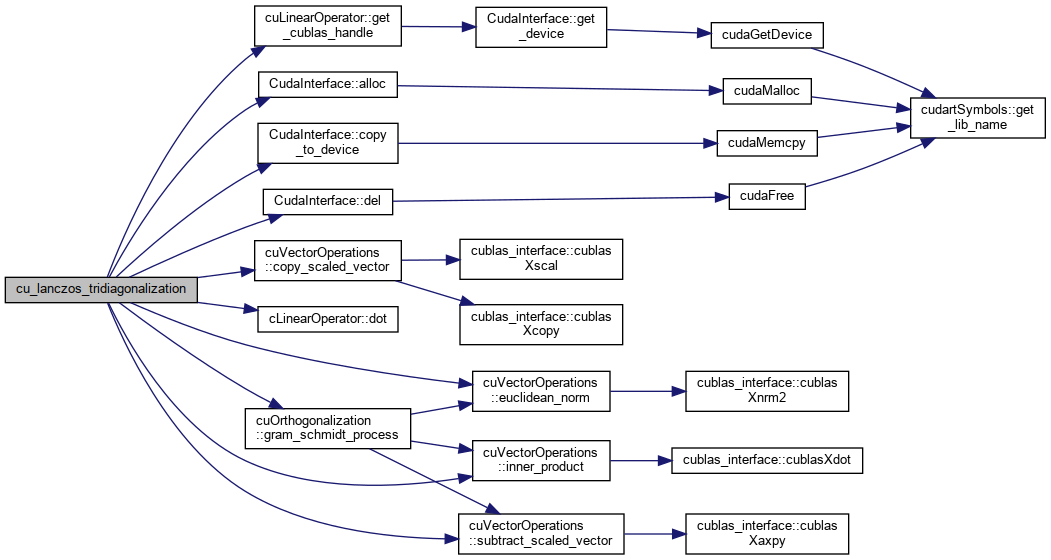

References CudaInterface< ArrayType >::alloc(), cuVectorOperations< DataType >::copy_scaled_vector(), CudaInterface< ArrayType >::copy_to_device(), CudaInterface< ArrayType >::del(), cLinearOperator< DataType >::dot(), cuVectorOperations< DataType >::euclidean_norm(), cuLinearOperator< DataType >::get_cublas_handle(), cuOrthogonalization< DataType >::gram_schmidt_process(), cuVectorOperations< DataType >::inner_product(), and cuVectorOperations< DataType >::subtract_scaled_vector().

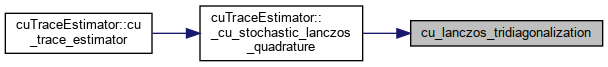

Referenced by cuTraceEstimator< DataType >::_cu_stochastic_lanczos_quadrature().

| template IndexType cu_lanczos_tridiagonalization< double > | ( | cuLinearOperator< double > * | A, |

| const double * | v, | ||

| const LongIndexType | n, | ||

| const IndexType | m, | ||

| const double | lanczos_tol, | ||

| const FlagType | orthogonalize, | ||

| double * | alpha, | ||

| double * | beta | ||

| ) |

| template IndexType cu_lanczos_tridiagonalization< float > | ( | cuLinearOperator< float > * | A, |

| const float * | v, | ||

| const LongIndexType | n, | ||

| const IndexType | m, | ||

| const float | lanczos_tol, | ||

| const FlagType | orthogonalize, | ||

| float * | alpha, | ||

| float * | beta | ||

| ) |